Machine learning isn't just about algorithms or data—it often depends on probability. “Stochastic” might sound abstract, but in this field, it means systems that allow randomness in how they learn or make decisions. Rather than always giving the same answer for a given input, these models introduce variation. That’s not a weakness—it’s a method for better learning.

This randomness helps models deal with noisy data, avoid rigid thinking, and handle uncertainty. It’s one of the reasons modern systems adapt well to real-world situations, where outcomes can’t always be predicted with complete certainty.

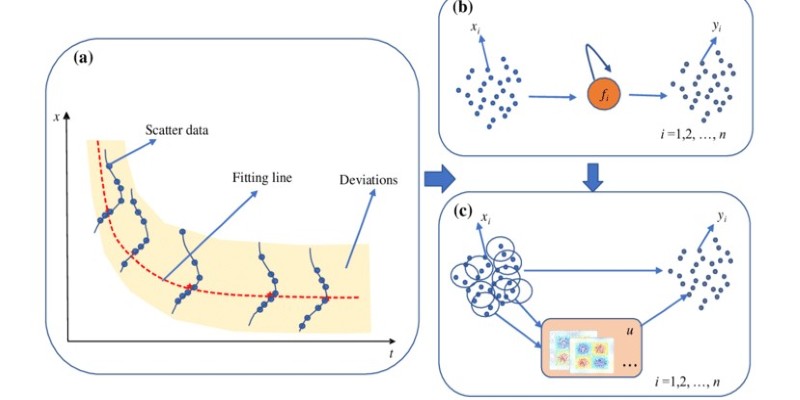

In machine learning, “stochastic” describes any process that includes randomness. This might happen during training, inference, or model design. A simple example is stochastic gradient descent (SGD), which uses random samples of data to update a model instead of full-batch calculations. This makes training faster and often leads to better generalization.

The opposite of stochastic is deterministic. A deterministic model always gives the same result for a given input. In contrast, a stochastic process can vary from one run to another. That might seem unstable at first, but over many iterations, the randomness acts like a tool. It helps the system explore more paths, which reduces the risk of settling for poor solutions.

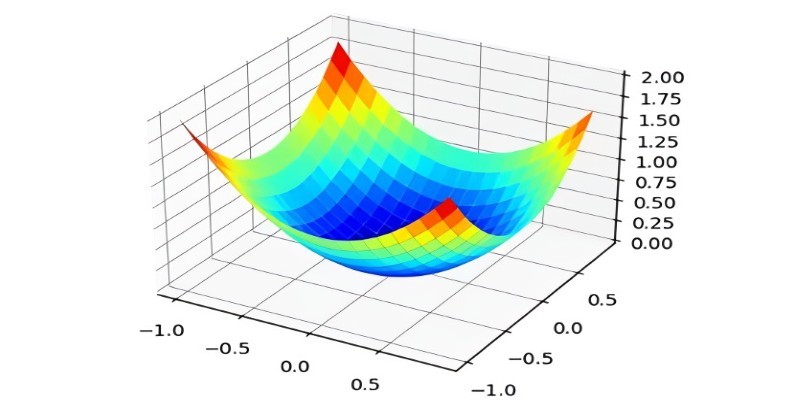

The randomness used isn’t uncontrolled. It follows defined probability distributions, which guide the process while still allowing variety. Stochastic methods can help models escape poor local minima, points where training might otherwise get stuck, by injecting enough noise to keep the optimizer moving. In practical terms, it’s like learning from different versions of a problem rather than practicing the same one over and over.

Training machine learning models usually involves minimizing a loss function. Stochastic methods help with this by introducing random variation during training. SGD, for instance, doesn’t use the whole dataset to compute gradients. It uses mini-batches chosen at random. This speeds up training and introduces noise that helps models generalize better, especially in large datasets.

That noise isn’t a flaw. It often acts as a mild regularizer that helps reduce overfitting. When a model overfits, it fits the training data so closely that it performs poorly on new inputs. The randomness in SGD works against that by shifting the training path slightly at every step, which pushes the model toward broader patterns rather than memorized details.
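The mini-batch idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the toy dataset, the function name `sgd_linear_fit`, and the hyperparameters (`lr`, `batch_size`, `epochs`, `seed`) are made up for the example and do not come from any particular framework.

```python
import random

def sgd_linear_fit(xs, ys, lr=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y ~ w*x + b by mini-batch SGD on mean squared error (toy sketch)."""
    rng = random.Random(seed)          # seeded so a run can be reproduced
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)           # random order => random mini-batches
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the squared error, averaged over this mini-batch only
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical toy data: y = 3x + 1 plus a little Gaussian noise
data_rng = random.Random(42)
xs = [i / 10 for i in range(20)]
ys = [3 * x + 1 + data_rng.gauss(0, 0.1) for x in xs]

w, b = sgd_linear_fit(xs, ys)
print(f"w={w:.2f}, b={b:.2f}")
```

Each update sees only four points, so individual steps are noisy, yet the estimates should settle close to the underlying slope and intercept. Changing `seed` changes the path taken, not the general destination.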

Dropout is another example. During training, it randomly zeroes out a fraction of the neurons in the network at each step. This discourages reliance on specific paths, making the system more adaptable. Once training is done, dropout is switched off and the network uses its full structure. So although randomness is present during training, the trained network gives the same output for the same input.
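A rough sketch of dropout on a list of activations follows. It assumes the common "inverted" variant, where surviving units are scaled up during training so that nothing needs rescaling at inference. The function name `dropout` and its parameters are illustrative, not a specific library's API.

```python
import random

def dropout(activations, p_drop=0.5, training=True, rng=random):
    """Inverted dropout (assumed variant): zero each unit with probability p_drop.

    p_drop must be below 1.0, otherwise nothing would survive.
    """
    if not training or p_drop == 0.0:
        return list(activations)       # inference: full network, no randomness
    keep = 1.0 - p_drop
    # Scale survivors by 1/keep so the expected activation stays the same
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

acts = [1.0, 2.0, 3.0, 4.0]
print(dropout(acts, training=True, rng=random.Random(0)))   # some units zeroed
print(dropout(acts, training=False))                        # unchanged copy
```

The `training` flag is what separates the two phases: the same function is random while learning and an identity at prediction time.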

Stochastic strategies also play a crucial role in reinforcement learning. Here, agents learn by interacting with an environment. If the agent always chose the same actions, it might never discover better paths. By making action selection probabilistic, the model can explore a wider range of options. This often leads to more successful long-term strategies.
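One simple way to make action selection probabilistic is epsilon-greedy exploration, shown here on a toy multi-armed bandit. This is a sketch of one technique among several, not the method of any specific system. The reward model (Gaussian with unit variance), the function name `epsilon_greedy_bandit`, and all parameter values are assumptions for illustration.

```python
import random

def epsilon_greedy_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    """Run epsilon-greedy on a toy bandit; return value estimates and pull counts."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                            # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])   # exploit
        reward = rng.gauss(true_means[arm], 1.0)   # hypothetical noisy reward
        counts[arm] += 1
        # Incremental running mean of the rewards seen for this arm
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = epsilon_greedy_bandit([0.0, 0.5, 1.5])
print(counts)
```

With `epsilon=0` the agent only exploits and can lock onto whichever arm happened to look good early. A small nonzero `epsilon` keeps every arm under occasional test, which is the exploration the paragraph above describes.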

Some models are stochastic by design. Probabilistic models use randomness to reflect uncertainty. Bayesian models, for example, represent parameters as distributions instead of fixed values. This allows them to express confidence levels in their predictions. In tasks like medical diagnostics, knowing how uncertain a prediction is can be as useful as the prediction itself.
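To make "parameters as distributions" concrete, here is a minimal Beta-Bernoulli sketch: an unknown success rate is described by a Beta distribution that is updated from observed counts. The uniform prior, the Monte Carlo interval estimate, and the helper names `beta_posterior` and `credible_interval` are assumptions chosen for the example.

```python
import random

def beta_posterior(successes, failures, prior_a=1.0, prior_b=1.0):
    """Posterior Beta(a, b) parameters for a Bernoulli rate under a Beta prior."""
    return prior_a + successes, prior_b + failures

def credible_interval(a, b, level=0.95, n_samples=20000, seed=0):
    """Monte Carlo estimate of a central credible interval for Beta(a, b)."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(n_samples))
    lo = samples[int((1 - level) / 2 * n_samples)]
    hi = samples[int((1 + level) / 2 * n_samples) - 1]
    return lo, hi

# Same observed rate (80%), very different amounts of evidence
small = credible_interval(*beta_posterior(8, 2))
large = credible_interval(*beta_posterior(80, 20))
print("10 observations: ", small)
print("100 observations:", large)
```

Both cases point at a similar rate, but the interval from 100 observations should be noticeably narrower. That width is the confidence information a single point estimate cannot carry.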

Generative models rely heavily on stochastic principles. Variational Autoencoders (VAEs), for instance, use random sampling from a learned distribution to create new data points. This makes them useful for tasks like image generation or style transfer. The randomness here helps the model produce diverse and creative outputs, even from a single set of training data.
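The sampling step can be sketched as follows, assuming the usual setup in which an encoder outputs a mean and a log-variance per latent dimension and the sample is drawn as `z = mu + sigma * eps` (often called the reparameterization trick). The encoder and decoder are omitted; `sample_latent` and the example values are hypothetical.

```python
import math
import random

def sample_latent(mu, log_var, rng=random):
    """Draw z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps, eps ~ N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]

# Hypothetical encoder outputs for a 3-dimensional latent space
mu = [0.2, -1.0, 0.5]
log_var = [-1.0, -2.0, 0.0]

rng = random.Random(0)
for _ in range(3):
    # Each draw would be passed to a decoder to produce a different output
    print(sample_latent(mu, log_var, rng=rng))
```

Every call yields a slightly different latent vector around `mu`, which is where the diversity of generated outputs comes from in this kind of model.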

GANs (Generative Adversarial Networks) also use stochastic sampling during training. The generator model starts from random noise and learns how to create realistic data. This randomness allows GANs to produce varied outputs and not just memorize the training data. The process works because it blends exploration with structured learning.

Stochastic methods even help models generate natural-sounding language. In tasks like text generation or translation, randomness during inference (like top-k sampling) helps avoid repetitive or robotic outputs. Instead of always choosing the highest-probability word, the model samples from a set of likely options, making the language feel more fluid.
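Top-k sampling can be sketched like this: keep the `k` highest-scoring candidates, turn their scores into probabilities with a softmax, and draw one at random. The logits below are invented numbers standing in for a language model's output, and `top_k_sample` is an illustrative helper rather than a library function.

```python
import math
import random

def top_k_sample(logits, k=3, rng=random):
    """Sample one token index from the k highest-scoring logits."""
    # Indices of the k largest logits
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the survivors (shifted by the max for stability)
    m = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - m) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]

logits = [0.1, 2.0, -1.0, 1.5, 0.3]   # hypothetical scores for 5 tokens
rng = random.Random(0)
print([top_k_sample(logits, k=2, rng=rng) for _ in range(10)])
```

Setting `k=1` reduces this to greedy decoding, which always returns the single highest-scoring token. Larger `k` admits more variety at the cost of occasionally picking less likely words.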

Stochastic behavior isn’t just a training trick—it helps make models useful in real scenarios. In fraud detection, attackers often change strategies. A rigid, deterministic system might miss new patterns. A stochastic one can adapt and learn from evolving data. It reacts to change rather than just matching past examples.

In robotics, models often deal with uncertain sensor data and unpredictable environments. Stochastic models help by allowing the robot to test different actions. Instead of always following the same path, the robot explores and adjusts in real-time. This flexibility is key for operating in the physical world.

Recommendation systems use randomness, too. If they always suggested the same items, users might miss better or newer choices. Introducing stochasticity in recommendations can uncover preferences deterministic systems might ignore. This leads to more useful and engaging suggestions.

Healthcare models benefit from probabilistic thinking as well. Diagnoses often involve uncertain or incomplete data. A model that expresses levels of confidence—rather than binary outcomes—is more trustworthy. It gives doctors and users more context for decisions.

The same applies to finance. Risk models relying only on past data might fail under new conditions. Stochastic models that factor in uncertainty can better predict outcomes in volatile markets. They reflect the reality that outcomes are rarely fixed or predictable.

By using randomness, machine learning systems act less like static machines and more like adaptive tools. They learn, test, and refine rather than repeat fixed routines. That adaptability makes them effective across many applications.

Stochastic processes play a key role in how machine learning models learn, adapt, and perform. By introducing controlled randomness, they help reduce overfitting, improve generalization, and handle uncertainty more effectively. Whether through training methods like SGD or probabilistic models that express confidence, stochastic elements help systems become more flexible and accurate. Rather than forcing predictable outcomes, they reflect the way real decisions work: through exploration, variation, and learning from uncertainty. This makes them more reliable, not less.

Advertisement

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

How the LiveCodeBench leaderboard offers a transparent, contamination-free way to evaluate code LLMs through real-world tasks and reasoning-focused benchmarks

How stochastic in machine learning improves model performance through randomness, optimization, and generalization for real-world applications

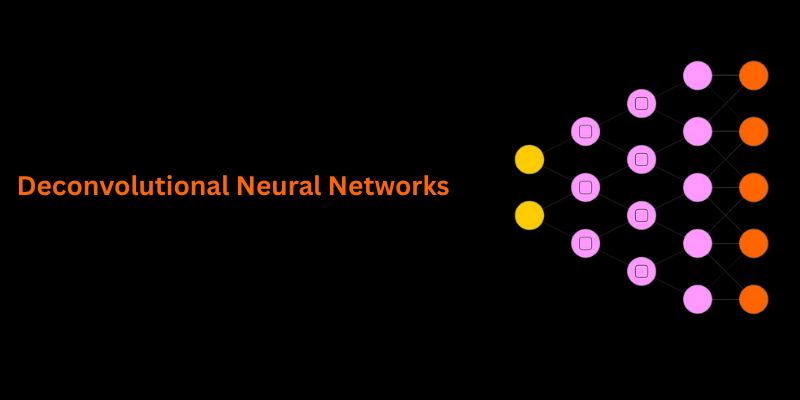

Understand how deconvolutional neural networks work, their roles in AI image processing, and why they matter in deep learning

How LLM evaluation is evolving through the 3C3H approach and the AraGen benchmark. Discover why cultural context and deeper reasoning now matter more than ever in assessing AI language models

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

Language modeling helps computers understand and learn human language. It is used in text generation and machine translation

AI interference lets the machine learning models make conclusions efficiently from the new data they have never seen before

What the Adam optimizer is, how it works, and why it’s the preferred adaptive learning rate optimizer in deep learning. Get a clear breakdown of its mechanics and use cases

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity