
In everyday language, inference means drawing a conclusion from facts or clues. In artificial intelligence, AI inference is the process in which a trained machine learning model recognizes patterns in brand-new data and draws conclusions from them. In other words, inference is the step where the AI uses what it has learned to make predictions or decisions, and it is usually fast.

Understanding how AI inference works is key to understanding AI systems. Therefore, today we will discuss what AI inference is, its major types, benefits, and more. So, don't stop here; keep reading to learn every little detail about AI inference!

AI inference is when an AI system uses what it has learned to understand new information. First, the AI is trained. During training, the model learns by looking at many examples, a process that takes time, data, and powerful computers. For example, a model shown many labeled pictures of cars learns to recognize the details that set one car apart from another. Once trained, it can look at new, random pictures and guess each car’s make and model. Likewise, if an AI was trained to recognize different types of fruit, inference would let it identify the fruit in a new image. That is inference: using past learning to make a new guess.
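To make the split between training and inference concrete, here is a minimal sketch in Python based on the fruit example above. It is only an illustration under stated assumptions: the two features (weight and roundness), the toy data, and the function names `train` and `infer` are all hypothetical, and the nearest-centroid rule stands in for whatever model a real system would use.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Training: average each label's feature vectors into one centroid."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: tuple(total / counts[label] for total in totals)
        for label, totals in sums.items()
    }


def infer(model, features):
    """Inference: pick the label whose centroid is closest to the new input."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical toy data: (weight in grams, roundness from 0 to 1).
training_examples = [
    ((150.0, 0.90), "apple"),
    ((170.0, 0.95), "apple"),
    ((120.0, 0.30), "banana"),
    ((130.0, 0.25), "banana"),
]

model = train(training_examples)          # slow step, done once
prediction = infer(model, (160.0, 0.85))  # fast step, repeated per new input
print(prediction)
```

The point of the sketch is the division of labor: `train` sees every example and runs once, while `infer` only compares one new input against what was already learned, which is why inference can be so much cheaper than training.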

AI inference is often fast enough to run in real time, so it can be used in many places. For example, it can help match license plates to car types at toll booths or border checks. It’s also used in healthcare, banking, shopping, and more. In short, AI inference helps computers make smart decisions, just like humans do, but often much faster. Remember, though, that AI inference is not the same as AI training.

AI inference follows some important steps to work well. Let's look at how AI inference works in detail here:

AI inference is how an AI system makes decisions or predictions after training. There are different types of inference, each useful in different situations. Let's discuss them below.

AI inference has many benefits when the model behind it is trained on the right data. Let’s discuss them below:

AI inference is a key part of how artificial intelligence works once a model has been trained on large amounts of data. AI uses inference to make smart decisions and predictions about new information. It helps AI systems work in real time across many areas, such as healthcare, banking, and self-driving cars. While training teaches the AI what to look for, inference is how it applies that knowledge in the real world. As AI technology grows, inference will become even faster, smarter, and more useful in daily life.


Explore how π0 and π0-FAST use vision-language-action models to simplify general robot control, making robots more responsive, adaptable, and easier to work with across various tasks and environments

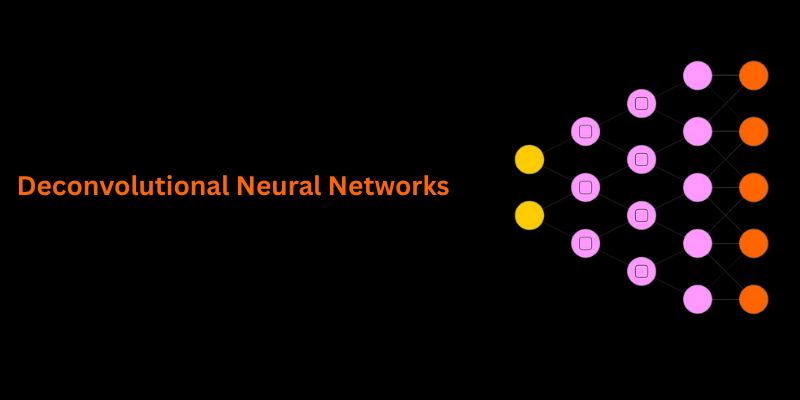

Understand how deconvolutional neural networks work, their roles in AI image processing, and why they matter in deep learning

How AI-powered earthquake forecasting is improving response times and enhancing seismic preparedness. Learn how machine learning is transforming earthquake prediction technology across the globe

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

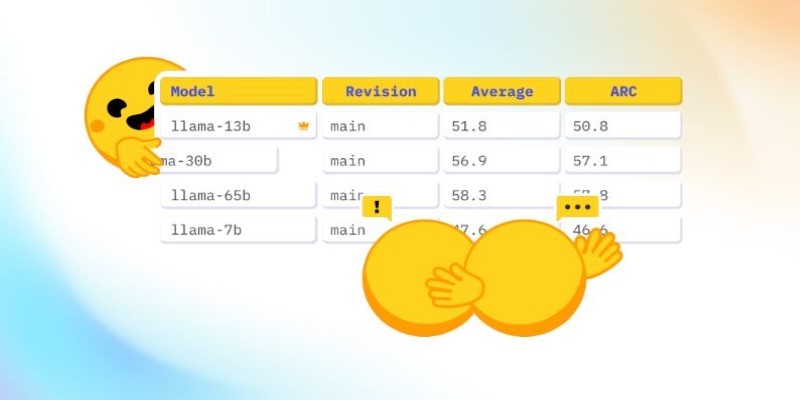

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

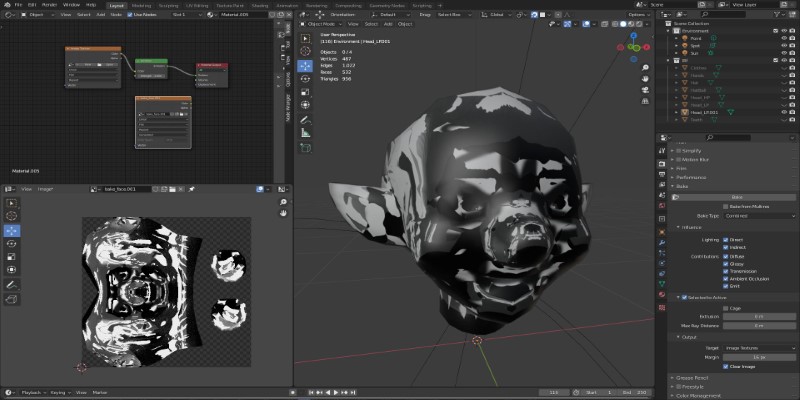

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

Access to data doesn’t guarantee better decisions—culture does. Here’s why building a strong data culture matters and how organizations can start doing it the right way

Looking for the best podcasts about generative AI? Here are ten shows that explain the tech, explore real-world uses, and keep you informed—whether you're a beginner or deep in the field

How temporal graphs in data science reveal patterns across time. This guide explains how to model, store, and analyze time-based relationships using temporal graphs

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

Looking to master SQL concepts in 2025? Explore these 10 carefully selected books designed for all levels, with clear guidance and real-world examples to sharpen your SQL skills

A simple and clear explanation of what cognitive computing is, how it mimics human thought processes, and where it’s being used today — from healthcare to finance and customer service