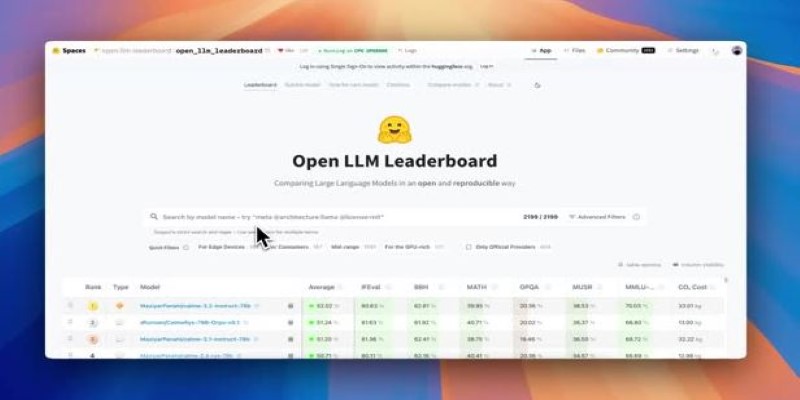

The growth of open-source language models has led to fierce competition and rapid development, with public leaderboards becoming the standard way to compare results. These leaderboards, which are often used to measure a model's abilities, rely on fixed benchmarks and numerical scores. However, there is a growing problem: high scores don't always indicate that a model is reasoning well. Instead, some models are built to pass tests rather than solve problems thoughtfully.

This disconnect is pushing researchers to rethink how models are evaluated. Math-Verify offers a practical shift. It measures a model’s ability to solve problems step by step, revealing whether it can actually reason rather than just guess the correct answer.

Most open-source LLM leaderboards focus on tasks like summarization, text generation, and question answering. These are useful tests, but they often judge models by how well their output matches a reference rather than whether the response makes sense. Some models perform well by generating answers that look right, even if the logic behind them is missing. This creates a system where surface-level performance is rewarded more than actual problem-solving ability.
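To make the gap between "matches the reference" and "is actually right" concrete, here is a minimal sketch in Python. It is an illustration only, not the Math-Verify API: the function names `exact_match` and `value_match` are hypothetical, and the value-based check handles only plain numbers and simple fractions.

```python
from fractions import Fraction


def exact_match(prediction: str, reference: str) -> bool:
    """Baseline scorer: plain string comparison after trimming whitespace."""
    return prediction.strip() == reference.strip()


def value_match(prediction: str, reference: str) -> bool:
    """Compare two answers by numeric value instead of by spelling.

    Hypothetical helper for illustration. It parses each side as an exact
    rational number and returns False when either side cannot be parsed.
    """
    try:
        return Fraction(prediction.strip()) == Fraction(reference.strip())
    except (ValueError, ZeroDivisionError):
        return False


# A correct answer written differently from the reference.
print(exact_match("0.5", "1/2"))
print(value_match("0.5", "1/2"))
```

Under string matching, the answer `0.5` is marked wrong against a reference of `1/2`, while the value-based check accepts it. A real evaluator would need to handle far more notation than this sketch does.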

Another concern is how easily these benchmarks can be optimized. Developers can train models on the structure and content of the tests themselves, inflating scores without improving the underlying reasoning. This makes it difficult to distinguish models that genuinely comprehend complex problems from those that are merely adept at mimicking patterns. Leaderboards become less about measuring capability and more about gaming the format.

Math-Verify changes how we evaluate language models by focusing on their reasoning process. Instead of checking only whether the final answer is correct, it examines each step a model takes to arrive at it. This kind of testing is especially useful in math-related tasks, where the method often matters more than the result. A model might arrive at a wrong answer but still demonstrate strong reasoning through valid steps, and that's useful feedback.

With Math-Verify, models are encouraged to show their full working process. These steps are then verified for mathematical consistency and logical accuracy. This adds a level of transparency that most benchmarks lack. The approach works well in tasks involving arithmetic, algebra, geometry, and word problems. It doesn't just test whether the model can say the right thing but also whether it can explain and justify it.

This framework also helps identify errors more clearly. When a model’s output is broken into steps, it’s easier to pinpoint where the reasoning falls apart. That makes debugging and comparison more informative. Instead of giving vague scores, Math-Verify shows how and where a model is strong — or where it starts to break.
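As a rough sketch of what pinpointing a broken step could look like, the Python snippet below checks a list of arithmetic steps and reports the first one that does not hold. Everything here is an assumption made for illustration: the `lhs = rhs` step format, the helper names `evaluate` and `first_invalid_step`, and the restriction to basic arithmetic. It is not how Math-Verify is implemented, and it checks each step in isolation rather than whether one step follows from the previous one.

```python
import ast
import operator
from fractions import Fraction

# Arithmetic operators this toy checker is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expr: str) -> Fraction:
    """Evaluate a plain arithmetic expression exactly, without using eval()."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return Fraction(str(node.value))
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")

    return walk(ast.parse(expr.strip(), mode="eval"))


def first_invalid_step(steps):
    """Return the index of the first step whose sides disagree, or None."""
    for index, step in enumerate(steps):
        sides = step.split("=")
        try:
            values = [evaluate(side) for side in sides]
        except (ValueError, SyntaxError, ZeroDivisionError):
            return index  # an unparseable step counts as a failure
        if len(values) < 2 or any(v != values[0] for v in values[1:]):
            return index
    return None


steps = ["12 * 3 = 36", "36 + 8 = 44", "44 / 4 = 12", "12 - 2 = 10"]
print(first_invalid_step(steps))  # 2
```

The third step claims that 44 / 4 equals 12, so the checker returns index 2. That is the kind of localized feedback a single pass/fail score cannot give.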

To bring Math-Verify into the leaderboard space, we need to introduce tasks that are based on reasoning, not just output similarity. This means presenting math problems that require structured, multi-step solutions. Models would be asked to generate explanations and intermediate steps, all of which are assessed for correctness and logic, either manually or using a symbolic checker.

This format doesn't replace traditional benchmarks, but it adds a layer that reveals more about how a model handles real challenges. A high-performing model under Math-Verify would be one that not only gets the answer right but also demonstrates how it solved the problem in a meaningful way. That's harder to fake and more valuable for users who depend on accurate, transparent results.

The impact on model development would be immediate. Developers would need to shift focus from just fine-tuning for specific benchmarks to improving the model’s ability to reason. That includes training models to understand math concepts, break down problems, and recognize mistakes — skills that go beyond memorizing formats or patterns.

By making step-based reasoning part of a leaderboard score, we create a system where thoughtful problem-solving is visible and rewarded. It’s not about punishing models that make small mistakes but about encouraging more grounded and explainable outputs. This helps both researchers and users choose models for tasks that require reliability, not just fluency.
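One way such a score could be put together is sketched below in Python. The weighting scheme, the `GradedSolution` type, and the default `step_weight` of 0.5 are all hypothetical choices for illustration; no existing leaderboard is known to use this exact formula. The point is only that partial credit for valid steps lets a small slip lower the score instead of zeroing it.

```python
from dataclasses import dataclass


@dataclass
class GradedSolution:
    """Result of checking one model solution (hypothetical structure)."""

    steps_valid: list[bool]  # one flag per intermediate step
    final_correct: bool      # whether the final answer matched


def reasoning_score(solution: GradedSolution, step_weight: float = 0.5) -> float:
    """Blend the fraction of valid steps with final-answer correctness.

    step_weight is an assumed tuning knob between 0 and 1. A solution with
    no recorded steps earns no step credit.
    """
    if not 0.0 <= step_weight <= 1.0:
        raise ValueError("step_weight must be between 0 and 1")
    if solution.steps_valid:
        step_fraction = sum(solution.steps_valid) / len(solution.steps_valid)
    else:
        step_fraction = 0.0
    return step_weight * step_fraction + (1.0 - step_weight) * float(solution.final_correct)


# Three of four steps hold, but the final answer is wrong.
print(reasoning_score(GradedSolution([True, True, False, True], False)))  # 0.375
```

With equal weights, a model that only guesses the right answer and shows no work tops out at 0.5, while one with mostly sound steps and a wrong answer still earns some credit.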

Adopting Math-Verify in leaderboards is a step toward honest and realistic model evaluation. It helps create a clear distinction between models that understand what they're doing and those that generate passable answers without solid reasoning. When a clear trail of logic supports every answer, it becomes easier to assess quality.

This change supports more practical use cases, particularly in areas such as education, science, and engineering, where the steps and logic are as important as the final output. It also gives developers more insight into how their models function. Instead of relying on opaque scores, they can track how models think and refine them based on actual reasoning performance.

More importantly, this type of testing encourages the development of models that are not only accurate but also reliable and explainable. In an open-source ecosystem where transparency is already valued, Math-Verify fits well. It's not about making things harder for developers — it's about making evaluations more meaningful.

By shifting attention to how models solve problems, not just whether they get the final answer right, Math-Verify brings balance back to the process of ranking LLMs. It makes scores more trustworthy, results more interpretable, and progress more grounded in real capabilities.

Leaderboards have become a major part of open LLM development, but they’re not always reliable indicators of reasoning strength. Many models learn to score well without actually understanding the problems they're solving. Math-Verify offers a fix. Asking models to explain their steps and verifying the logic behind them creates a deeper, more honest picture of performance. This doesn't just make scores more useful — it helps build models that are smarter in ways that matter. If we want better tools, we need better tests. Math-Verify brings us closer to evaluations that reflect real skills, not just good guesses.
