
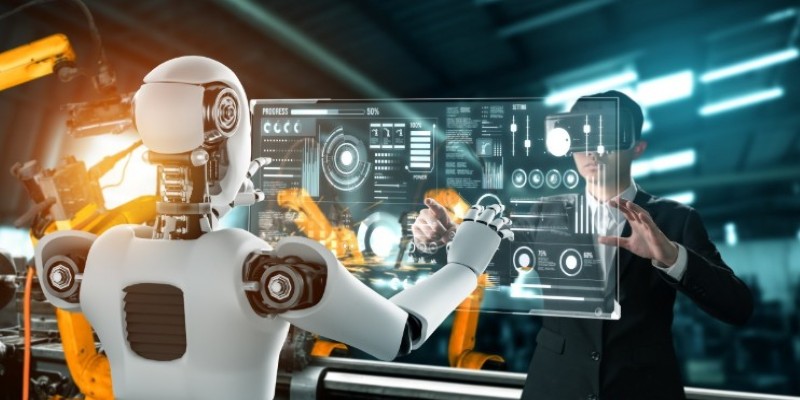

Artificial general intelligence describes the hypothetical ability of a machine to understand, learn, and interpret tasks or things intellectually, the same way human beings do. In other words, it is a type of artificial intelligence that could mimic the cognitive abilities of the human brain, give software human-like intelligence, and teach itself new skills when needed.

Although it differs from today's artificial intelligence in terms of cognitive abilities, its purpose remains the same: producing results close to human intelligence. Today's AI already powers applications such as self-driving cars and IBM’s Watson, and AGI promises advancements that could revolutionize entire industries. If you want to know more about AGI or how it differs from AI, we have you covered. Keep reading to learn everything in detail!

The artificial intelligence we use today generally performs functions within predetermined parameters, such as image generators or website builders. None of these tools can perform a function outside its defined parameters; a website builder, for example, cannot generate images. This is where artificial general intelligence comes in. AGI (artificial general intelligence) is a hypothetical AI system with autonomous control that can learn new skills and understand situations beyond the complex problems and settings it was given at creation time. Other core characteristics that differentiate AGI from other forms of AI are:

Because AGI is not limited to a single field, its pursuit involves interdisciplinary collaboration among fields like computer science, neuroscience, and cognitive psychology. Although advances in these fields are shaping the future of AGI, it remains largely a concept that researchers and engineers are working to make a reality.

Artificial intelligence is a branch of computer science that enables software to perform specific tasks at or near human-level performance. Artificial general intelligence, on the other hand, would solve problems across different domains without manual human intervention. It would not be limited to a specific scope; it could teach itself to perform tasks it was never trained for.

However, many scientists and researchers consider AGI to still be hypothetical. They emphasize that an AGI system would handle new tasks without additional training, whereas today's AI systems require a substantial amount of training before they can perform a specialized task within their domain. Medical chatbots are a good example: they rely on a large language model trained on medical datasets to perform their functions.

Because of its broad scope, AGI requires a wide range of data, technologies, and interconnected systems to drive AI models that mimic human cognitive behavior, creativity, perception, memory, and learning. Experts have proposed the following methods to drive AGI research:

Although AGI still seems a distant goal, research efforts toward it are ongoing. Here are some emerging technologies that could contribute to its development:

A few examples of AGI-like capabilities already present in today's AI systems include:

Over the decades, AI researchers have sought to mimic human intelligence in performing different tasks using advanced machine intelligence. They have succeeded to some extent, which is why AGI is now a serious research goal, even though it does not exist yet. Artificial General Intelligence (AGI) is a theoretical concept of a machine that learns, understands, and performs different tasks by replicating human intelligence. It would mimic the human cognitive abilities to think, perceive, and act. It differs from today's AI, which excels at specific tasks like driving cars; AGI instead aims to achieve human-like cognition across tasks. Although it is not fully developed yet, early steps toward it are visible in different forms of artificial intelligence.

Advertisement

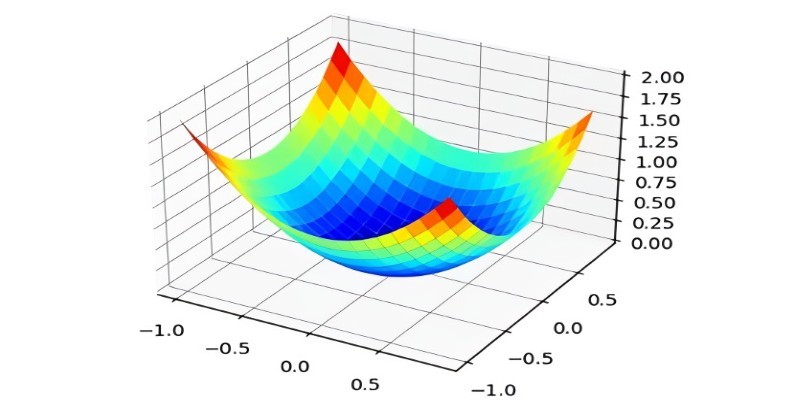

What the Adam optimizer is, how it works, and why it’s the preferred adaptive learning rate optimizer in deep learning. Get a clear breakdown of its mechanics and use cases

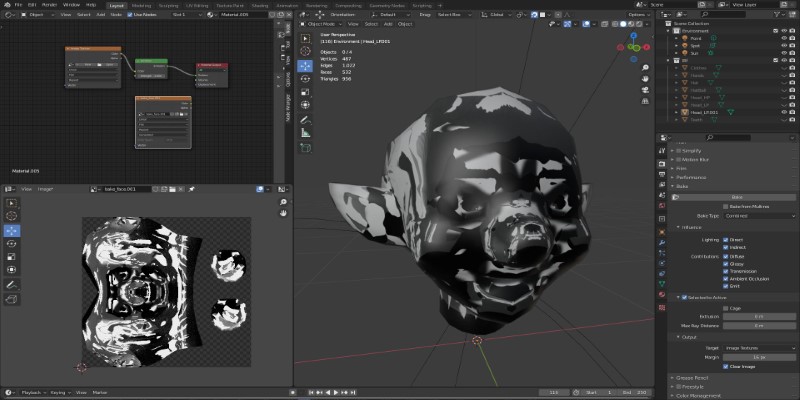

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

A simple and clear explanation of what cognitive computing is, how it mimics human thought processes, and where it’s being used today — from healthcare to finance and customer service

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

How Monster API simplifies open source model tuning and deployment, offering a faster, more efficient path from training to production without heavy infrastructure work

Gradio's new data frame brings real-time editing, better data type support, and smoother performance to interactive AI demos. See how this structured data component improves user experience and speeds up prototyping

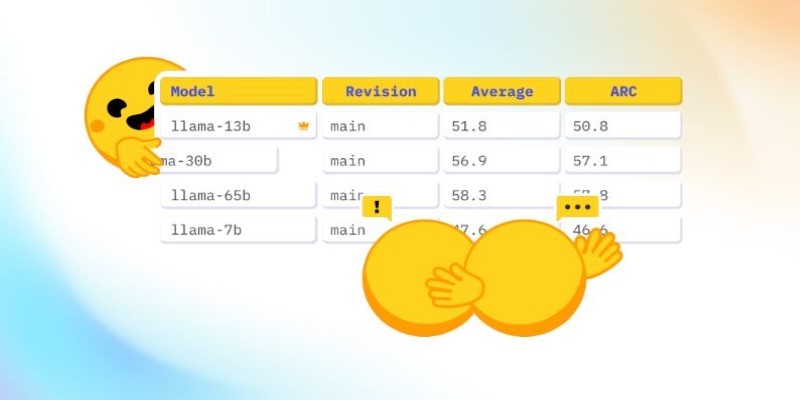

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

Explore how π0 and π0-FAST use vision-language-action models to simplify general robot control, making robots more responsive, adaptable, and easier to work with across various tasks and environments

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

How One Hot Encoding converts text-based categories into numerical data for machine learning. Understand its role, benefits, and how it handles categorical variables

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Natural language generation is a type of AI which helps the computer turn data, patterns, or facts into written or spoken words