When training machine learning models, especially deep networks, choosing the right optimizer makes a difference. One of the most widely used is Adam. It’s not just popular—it’s practical. Developers and researchers turn to Adam because it tends to work well without much tuning. It adapts during training, handles sparse gradients, and performs steadily across many tasks.

While the name might seem technical, the idea is simple: Adam blends two existing methods, Momentum and RMSProp, into a single update rule. In this article, we'll break down how Adam works, why it's widely used, and where it fits in today's machine-learning workflows.

Adam, short for Adaptive Moment Estimation, updates neural network weights using two exponential moving averages: one of the gradients (the first moment) and one of their squares (the second moment). The first average sets the direction of each update, and the second scales its size.

This technique combines ideas from Momentum, which smooths updates using past gradients, and RMSProp, which adapts learning rates based on gradient magnitude. By blending both, Adam adapts to the structure of the loss surface more efficiently than basic stochastic gradient descent (SGD).

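The learning rate in Adam is dynamic. Instead of applying a single learning rate across all weights, Adam gives each parameter its own effective step size: the base learning rate divided by the square root of that parameter's second-moment average. Parameters with consistently large gradients take smaller steps, and parameters with small or infrequent gradients take larger ones. This is useful when gradient scales vary across weights, as in many deep models.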
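To make the per-parameter learning rate concrete, here is a minimal pure-Python sketch. The function name `effective_step_sizes` and the toy gradient history are illustrative assumptions, not part of any library. It tracks only the moving average of squared gradients and reports the resulting scale factor for each parameter.

```python
import math

def effective_step_sizes(grad_history, lr=0.001, beta2=0.999, eps=1e-8):
    """Return lr / (sqrt(v_hat) + eps) for each parameter.

    grad_history is a list of gradient vectors, one per training step.
    Hypothetical helper for illustration only.
    """
    n_params = len(grad_history[0])
    v = [0.0] * n_params
    for grads in grad_history:
        # Exponential moving average of squared gradients, per parameter.
        v = [beta2 * v_i + (1 - beta2) * g * g for v_i, g in zip(v, grads)]
    t = len(grad_history)
    # Standard Adam start-up correction for the zero-initialised average.
    v_hat = [v_i / (1 - beta2 ** t) for v_i in v]
    return [lr / (math.sqrt(vh) + eps) for vh in v_hat]

# Parameter 0 sees large gradients, parameter 1 sees small ones.
history = [[10.0, 0.01]] * 100
scales = effective_step_sizes(history)
print(scales)
```

In this toy setup the parameter with small gradients ends up with a scale factor roughly a thousand times larger than the one with large gradients, even though both share the same base learning rate.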

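Both moving averages start at zero, so early in training they are biased toward zero. Adam corrects this by dividing each average by 1 - beta^t, where beta is that average's decay rate and t is the step count. The correction matters most in the initial steps and fades as t grows. Without it, the second-moment average would be underestimated more strongly than the first, and the earliest updates would be too large.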
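The pieces described so far (two moving averages, bias correction, and a normalized step) can be put together in a short sketch. This is a plain-Python illustration that works on lists of floats, not how any framework implements it; the function name `adam_step` and the toy objective are assumptions made for the example.

```python
import math

def adam_step(params, grads, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update over lists of floats; t is the 1-based step count."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g        # first moment (mean of gradients)
        v_i = beta2 * v_i + (1 - beta2) * g * g    # second moment (mean of squares)
        m_hat = m_i / (1 - beta1 ** t)             # bias correction for zero start
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v

# Toy problem: minimise f(x) = (x - 3)^2, starting from x = 0.
params, m, v = [0.0], [0.0], [0.0]
for t in range(1, 5001):
    grads = [2 * (params[0] - 3.0)]
    params, m, v = adam_step(params, grads, m, v, t, lr=0.01)
print(params[0])  # should end up close to 3
```

One consequence of the bias correction is visible on the very first step: the corrected averages reduce to the gradient and its square, so the update has magnitude close to the learning rate regardless of how large the gradient is.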

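Because of its structure, Adam handles noisy or sparse gradients better than many traditional optimizers. It adjusts quickly to changing gradients, and because each update is normalized by the second-moment average, step sizes stay on the order of the learning rate even when raw gradient magnitudes are very large or very small. This helps stabilize training in deep networks, where gradients can easily explode or vanish.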

How Adam Became the Go-To Optimizer

Adam became popular because it's both effective and easy to use. Unlike SGD, which often requires careful learning rate tuning and decay schedules, Adam usually performs well with default settings. This makes it a convenient choice across a wide range of models.

It's especially useful in natural language processing and computer vision, where models have millions or even billions of parameters. At that scale, tuning learning rates manually becomes difficult. Adam handles this by adapting automatically, allowing developers to get good results faster.

Another major reason for Adam’s popularity is its performance on sparse data. In many NLP tasks, not every parameter receives a gradient in each batch. Adam keeps separate moment estimates for every parameter, so rarely updated weights still take reasonably sized steps when their gradients do arrive.

That said, Adam isn’t perfect. In some cases, SGD with momentum may yield better generalization. Researchers have seen this in large-scale vision tasks or fine-tuning pre-trained models. But for most use cases—especially when training from scratch—Adam gives solid results with minimal fuss.

Its default settings (learning rate of 0.001, beta1=0.9, beta2=0.999, epsilon=1e-8) often work well across problems, which lowers the barrier to entry for beginners and helps experienced users get up and running quickly.

Adam’s behavior is shaped by a few key hyperparameters. The best known is the learning rate. Although 0.001 is standard, some models benefit from values like 0.0001 or 0.01, depending on how stable or aggressive the updates need to be.

Beta1 and beta2 control the decay rates for the moving averages of the gradients and their squares. Beta1 governs how much past gradients affect the current update, while beta2 does the same for squared gradients. High values (close to 1) smooth the averages more, making updates slower to react.
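A small experiment shows what "slower to react" means in practice. The sketch below is an illustrative assumption: it applies a plain exponential moving average (without bias correction) to a gradient that suddenly flips sign and counts how many steps each decay rate needs before the average follows. The helper names are hypothetical.

```python
def ema_trace(grads, beta):
    """Exponential moving average of a gradient sequence (no bias correction)."""
    avg, trace = 0.0, []
    for g in grads:
        avg = beta * avg + (1 - beta) * g
        trace.append(avg)
    return trace

def steps_until_negative(trace, start):
    """Number of steps from index `start` until the average turns negative."""
    for i in range(start, len(trace)):
        if trace[i] < 0:
            return i - start + 1
    return None

# 50 steps of gradient +1, then the gradient flips to -1.
grads = [1.0] * 50 + [-1.0] * 50
for beta in (0.8, 0.9, 0.99):
    lag = steps_until_negative(ema_trace(grads, beta), start=50)
    print(f"beta={beta}: average turns negative after {lag} steps")
```

The larger the decay rate, the longer the average keeps pointing in the old direction, which is the trade-off between smoothing out noise and responding to real changes in the gradient.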

Epsilon is a small constant added to the denominator to prevent division by zero when normalizing the gradients. It usually stays at 1e-8, but when training is unstable because the second-moment average is very small, raising epsilon can help by damping the resulting large steps.
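The effect of epsilon is easiest to see on the first update, where the bias-corrected averages reduce to the gradient and its square. The following sketch (the function `first_step_size` is a hypothetical helper for illustration) prints the update magnitude for a few gradient sizes and two epsilon values.

```python
import math

def first_step_size(grad, lr=0.001, eps=1e-8):
    """Magnitude of Adam's first update for a single parameter.

    On step 1 the bias-corrected moments reduce to m_hat = grad and
    v_hat = grad**2, so the update is lr * |grad| / (|grad| + eps).
    """
    return lr * abs(grad) / (math.sqrt(grad * grad) + eps)

for grad in (1.0, 1e-6, 1e-9):
    for eps in (1e-8, 1e-4):
        step = first_step_size(grad, eps=eps)
        print(f"grad={grad:g} eps={eps:g} step={step:.3e}")
```

For gradients much larger than epsilon, the step stays near the learning rate. Once the gradient falls to the same order as epsilon or below, the step shrinks, so a larger epsilon mainly damps updates for parameters with very small gradients.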

Adam is generally forgiving with these hyperparameters, which adds to its appeal. Still, understanding their roles can help when fine-tuning is necessary. For example, reducing beta1 might help models that deal with fast-changing data or where gradients shift frequently.

A newer variant, AdamW, separates weight decay from the gradient-based update. In standard Adam, weight decay is usually implemented as an L2 penalty added to the gradient, so the decay term is rescaled by the same adaptive step sizes as the rest of the gradient, which can affect generalization. AdamW fixes this by applying weight decay directly to the weights, leading to better performance in some tasks, especially in transformers and language models.
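The difference between the two approaches can be sketched for a single weight. This is a simplified illustration, not any framework's implementation: the function names are hypothetical, and the decoupled decay is scaled by the learning rate, which is one common convention.

```python
import math

def adam_l2_step(w, grad, m, v, t, lr=0.001, wd=0.01,
                 beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam with an L2 penalty: wd * w is added to the gradient, so the
    decay term passes through the adaptive normalisation."""
    g = grad + wd * w
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v

def adamw_step(w, grad, m, v, t, lr=0.001, wd=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8):
    """AdamW-style update: moments use the raw gradient, and the decay
    is applied to the weight directly (assumed here to be scaled by lr)."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return w - lr * (m_hat / (math.sqrt(v_hat) + eps) + wd * w), m, v

# With a zero loss gradient, only the decay term moves the weight.
for w0 in (5.0, 0.5):
    l2_w, _, _ = adam_l2_step(w0, 0.0, 0.0, 0.0, t=1)
    aw_w, _, _ = adamw_step(w0, 0.0, 0.0, 0.0, t=1)
    print(f"w0={w0}: L2 shrinks by {w0 - l2_w:.2e}, AdamW shrinks by {w0 - aw_w:.2e}")
```

In this toy case the L2 version shrinks both weights by about the same amount, because the normalization cancels the size of the decay term, while the AdamW-style version shrinks each weight in proportion to its magnitude.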

Adam has become a default choice across deep learning frameworks like PyTorch, TensorFlow, and JAX. Its consistent performance makes it suitable for a wide range of tasks—image recognition, text generation, audio synthesis, and more.

It's often the optimizer of choice when pretraining large models. Transformers like BERT, GPT, and T5 all use Adam or one of its variants during the initial training phase. This shows how deeply integrated Adam is in modern machine learning.

Despite its popularity, Adam isn’t always the best choice. It stores two moving averages for every parameter, so in memory-constrained environments a simpler optimizer like SGD may be more efficient. Some research shows SGD with learning rate schedules can outperform Adam in generalization on certain benchmarks, but these cases usually involve models that have already been tuned extensively.

New optimizers continue to appear, each trying to offer better convergence, faster training, or improved generalization. Still, Adam remains the go-to for many projects. Its adaptability, ease of use, and wide support keep it relevant even as the landscape evolves.

For developers building production models or experimenting with prototypes, Adam offers a balance between reliability and performance. Whether you're building a small recommendation engine or training a foundation model, Adam is often a solid starting point.

The Adam optimizer simplifies the training process while delivering strong, reliable performance across a wide range of models. Its ability to adapt learning rates per parameter, smooth gradients over time, and handle noisy updates makes it a strong fit for deep learning. Unlike older methods, it usually doesn’t require constant tweaking or elaborate schedules to work well. That’s why it’s so widely used, from research labs to production systems. While alternatives like AdamW and SGD may shine in specific cases, Adam's mix of flexibility and simplicity keeps it at the center of most machine-learning workflows. It's not perfect, but it gets the job done, well and often.

Advertisement

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

How LLM evaluation is evolving through the 3C3H approach and the AraGen benchmark. Discover why cultural context and deeper reasoning now matter more than ever in assessing AI language models

Explore the Rabbit R1, a groundbreaking AI device that simplifies daily tasks by acting on your behalf. Learn how this AI assistant device changes how we interact with technology

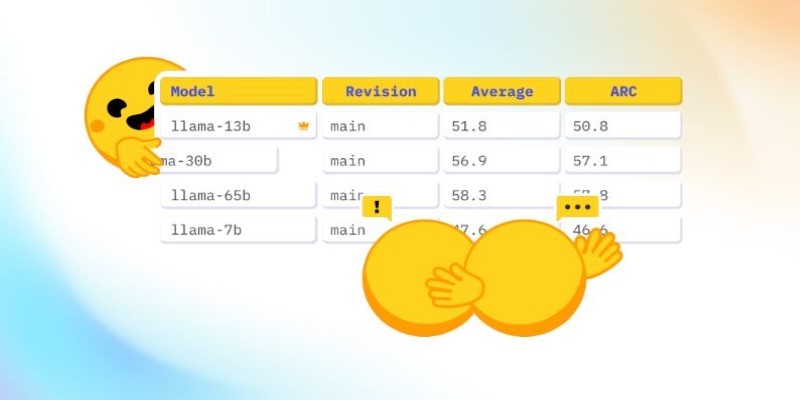

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

How Voronoi diagrams help with spatial partitioning in fields like geography, data science, and telecommunications. Learn how they divide space by distance and why they're so widely used

What data annotation is, why it matters in machine learning, and how it works across tools, types, and formats. A clear look at real-world uses and common challenges

Language modeling helps computers understand and learn human language. It is used in text generation and machine translation

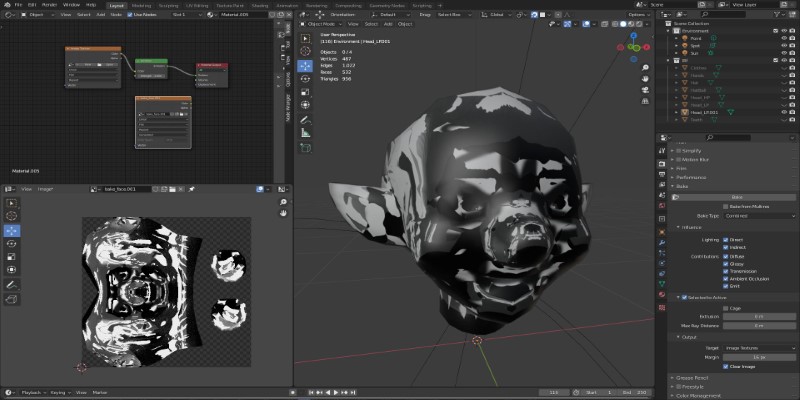

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

A simple and clear explanation of what cognitive computing is, how it mimics human thought processes, and where it’s being used today — from healthcare to finance and customer service

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion