
From predictive text on your phone to voice assistants and chatbots, language modeling helps make the interaction between people and technology feel seamless. But what is language modeling, and how does it work? Language modeling is a method that helps computers understand and predict human language. It works by learning patterns in how words are used in sentences.

Language modeling is useful in many fields, particularly Natural Language Processing (NLP). Whether you are a tech enthusiast or simply curious about how machines understand language, it is worth knowing how language modeling works. So sit back, sip your coffee, and read on to learn what language modeling is, how it works, where it is used, and more!

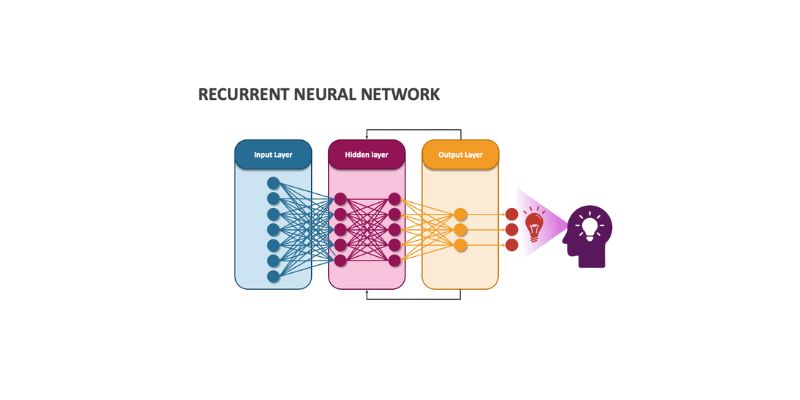

Language modeling is a method for helping computers understand and use human language. It works by looking at the words that came before and predicting which word is likely to come next. This helps the computer learn how sentences are formed and what words and sentences mean. Modern language models are usually built with deep learning models, a special type of computer program; popular examples include recurrent neural networks (RNNs) and Transformers. Before these models can make predictions, they must be trained.
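Predicting each word from the words before it can be written as a probability. The chain-rule formulation below is the general textbook view, not something specific to this article: the probability of a sentence of words w_1 to w_n is the product of each word's probability given all the words that precede it.

```latex
P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})
```

For example, P(the, cat, sat) = P(the) · P(cat | the) · P(sat | the, cat). A language model's job is to estimate each of these conditional probabilities as well as it can.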

A large amount of text, such as books, articles, and websites, is used for training. During training, the model learns patterns in the text: it studies how words are used together and which words usually follow others. Over time, the model gets better at predicting the next word. Language modeling is useful in many areas of technology. For example, it helps translate text from one language to another, it supports sentiment analysis (identifying the feelings or opinions expressed in a text), and it is used in speech-to-text systems. It also powers smart assistants like Siri and Alexa, and it helps businesses understand customer messages, reviews, and more.
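To make the training idea more concrete, here is a minimal sketch of the simplest statistical approach, a bigram model, in Python. It is a swapped-in illustration, not how RNNs or Transformers work internally: "training" here just counts which word follows which in a tiny hypothetical corpus, and prediction returns the most frequent followers. The function names `train_bigram`, `next_word_probs`, and `predict_next`, and the `<s>` start-of-sentence marker, are assumptions made for this example.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """'Train' by counting how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> marks the start of a sentence so the first word also has a "previous" word
        tokens = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn follow-counts into probabilities: count(prev, w) / count(prev, anything)."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(counts, prev, k=3):
    """Return up to k most likely next words, like a phone keyboard's suggestions."""
    probs = next_word_probs(counts, prev)
    # Sort by descending probability; break ties alphabetically for stable output
    ranked = sorted(probs.items(), key=lambda kv: (-kv[1], kv[0]))
    return [w for w, _ in ranked[:k]]


# Hypothetical toy corpus; real models train on vastly more text
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(predict_next(model, "the"))
```

In this toy corpus, "the" is followed by "cat" and "dog" twice each out of six occurrences, so each gets probability 1/3, and the top three suggestions after "the" are `['cat', 'dog', 'mat']`. Counting is fast and simple, but the model only ever sees one previous word.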

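Language models are tools that help computers understand human language. There are two main types, statistical and neural, and they learn how words are used in sentences in different ways. Statistical models estimate word probabilities from counts in their training text, while neural models learn these patterns with deep learning.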
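A common way to formalize a statistical model is the n-gram approximation, shown below as a general textbook formulation rather than this article's own method. Instead of conditioning on every earlier word, the model looks only at the last n-1 words and estimates probabilities from counts in the training text. This fixed window is one reason statistical models struggle with long sentences: anything further back than n-1 words is ignored.

```latex
P(w_i \mid w_1, \ldots, w_{i-1}) \approx P(w_i \mid w_{i-n+1}, \ldots, w_{i-1})
\qquad \text{e.g. bigram } (n = 2): \quad
P(w_i \mid w_{i-1}) = \frac{\operatorname{count}(w_{i-1}, w_i)}{\operatorname{count}(w_{i-1})}
```

Neural models replace these count-based estimates with learned functions that can take much more of the preceding context into account.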

Several important tools and ideas are often used with language modeling, including Transformers, RNNs, and word embeddings.
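Word embeddings represent each word as a list of numbers (a vector), so that words used in similar ways end up with similar vectors. The sketch below, in Python, compares hand-picked, hypothetical three-dimensional vectors using cosine similarity, a common way to measure how close two embeddings are. Real embeddings are learned during training and usually have far more dimensions; the words, values, and helper names (`EMBEDDINGS`, `cosine_similarity`, `most_similar`) are illustrative assumptions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration only.
# Real embeddings are learned from text and typically have hundreds of dimensions.
EMBEDDINGS = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.15],
    "apple": [0.05, 0.10, 0.90],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, embeddings):
    """Return the other word whose vector points in the most similar direction."""
    target = embeddings[word]
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(target, embeddings[w]))


print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
print(most_similar("king", EMBEDDINGS))
```

With these made-up values, "king" and "queen" score close to 1.0 while "king" and "apple" score about 0.21, so `most_similar("king", EMBEDDINGS)` returns `"queen"`.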

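Language modeling is used in many valuable ways. Some of its most important use cases include predictive text, machine translation, sentiment analysis, speech-to-text, voice assistants, and chatbots.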

Language modeling is a powerful tool that helps computers understand and work with human language. There are two main types of language models: statistical and neural. Statistical models are simple and fast but struggle with long sentences, because they only look at a few nearby words. Neural models, on the other hand, are more advanced and handle complex language better. Technologies like Transformers, RNNs, and word embeddings strengthen language modeling. We use language modeling every day without even realizing it, whenever we talk to a voice assistant, translate text, or chat with a bot. As technology improves, language modeling will become useful in even more fields.

Advertisement

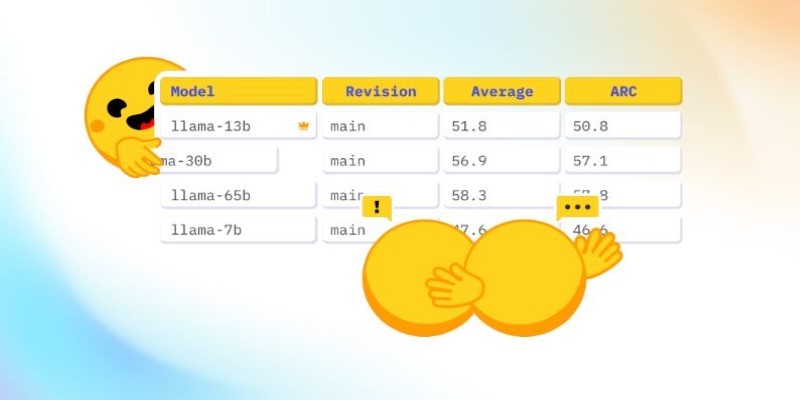

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

How Voronoi diagrams help with spatial partitioning in fields like geography, data science, and telecommunications. Learn how they divide space by distance and why they're so widely used

How Monster API simplifies open source model tuning and deployment, offering a faster, more efficient path from training to production without heavy infrastructure work

How Open-source DeepResearch is reshaping the way AI search agents are built and used, giving individuals and communities control over their digital research tools

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

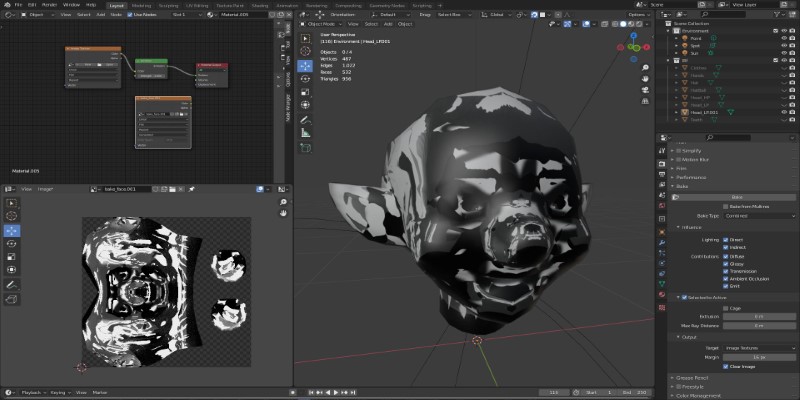

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Natural language generation is a type of AI which helps the computer turn data, patterns, or facts into written or spoken words

Looking to master SQL concepts in 2025? Explore these 10 carefully selected books designed for all levels, with clear guidance and real-world examples to sharpen your SQL skills

How One Hot Encoding converts text-based categories into numerical data for machine learning. Understand its role, benefits, and how it handles categorical variables

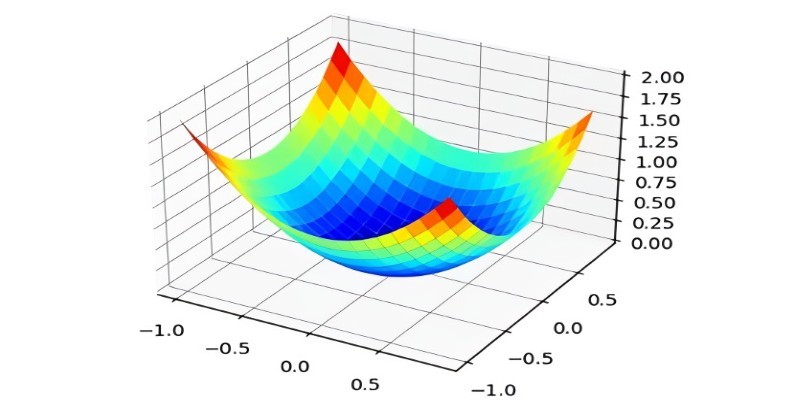

What the Adam optimizer is, how it works, and why it’s the preferred adaptive learning rate optimizer in deep learning. Get a clear breakdown of its mechanics and use cases