Arabic is one of the most widely spoken languages in the world, yet in natural language processing (NLP) it often lags behind, owing to its complex structure and its underrepresentation in research. This gap has left Arabic speakers with less accurate tools and has slowed progress in building effective language models. Now, things are starting to shift. With growing attention to language inclusivity and regional representation, the field is seeing several new efforts to improve Arabic NLP.

These include new instruction-following datasets, major upgrades to Arabic language generation tools, and dedicated leaderboards for evaluating progress. The momentum around Arabic language AI is real, and it's starting to show results.

Much of the success of modern language models, especially those built for English, comes from training them to follow instructions. Users can ask these models to explain concepts, summarize text, translate, or write in a particular tone. This behavior, known as instruction following, does not come naturally to every model; it has to be trained using high-quality data.

Arabic instruction following has historically lacked the attention that English and a few other languages have received. Recently, new projects have begun tackling this issue directly. Researchers are building instruction-tuned datasets in Arabic, manually curating prompts and responses that help train models to respond more naturally and effectively to user input in Arabic. These datasets mix short prompts, detailed questions, and multi-turn conversations, reflecting a range of practical uses, from everyday queries to more nuanced discussions.

What matters here is cultural and linguistic nuance. Arabic is not a single dialect; it is a family of dialects whose grammar and vocabulary vary by region. Modern Standard Arabic (MSA) is the common ground in writing, but spoken dialects differ greatly. The new datasets aim to account for this, offering examples across multiple dialects while grounding most of the data in MSA for consistency.
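To make this concrete, here is a minimal sketch of how such a dataset might store multi-turn instruction records with a dialect label. It also includes a helper that reports what share of the data is MSA versus dialectal. The schema is a hypothetical illustration, not the format of any particular Arabic dataset. That covers `InstructionRecord`, `Turn`, the `dialect` field, and its codes.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical schema: class names, field names, and dialect codes are illustrative only.
@dataclass
class Turn:
    role: str      # "user" or "assistant"
    content: str   # the Arabic text of this turn

@dataclass
class InstructionRecord:
    dialect: str                                   # e.g. "msa", "egyptian", "levantine", "gulf"
    turns: list[Turn] = field(default_factory=list)

def dialect_share(records: list[InstructionRecord]) -> dict[str, float]:
    """Return the fraction of records labeled with each dialect."""
    counts = Counter(r.dialect for r in records)
    total = sum(counts.values())
    return {dialect: n / total for dialect, n in counts.items()}

records = [
    # MSA, single turn: "Explain what artificial intelligence means in one sentence."
    InstructionRecord("msa", [
        Turn("user", "اشرح معنى الذكاء الاصطناعي بجملة واحدة."),
        Turn("assistant", "الذكاء الاصطناعي هو قدرة الحاسوب على أداء مهام تتطلب عادةً ذكاءً بشرياً."),
    ]),
    # MSA, multi-turn: "What is the capital of Jordan?" followed by "And the capital of Saudi Arabia?"
    InstructionRecord("msa", [
        Turn("user", "ما عاصمة الأردن؟"),
        Turn("assistant", "عاصمة الأردن هي عمّان."),
        Turn("user", "وما عاصمة السعودية؟"),
        Turn("assistant", "عاصمة السعودية هي الرياض."),
    ]),
    # Egyptian Arabic: "How do I make mint tea?"
    InstructionRecord("egyptian", [
        Turn("user", "إزاي أعمل شاي بالنعناع؟"),
        Turn("assistant", "اغلي الميّة وحط الشاي والنعناع وسيبهم خمس دقايق."),
    ]),
]

print(dialect_share(records))
```

Measuring the dialect share this way makes an MSA-heavy mix visible while a dataset is being assembled, which is the balance the datasets described above try to manage.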

The result is more accurate, relevant, and natural interactions for Arabic-speaking users. These instruction-following capabilities make AI systems more useful for education, content creation, and general assistance.

Another key development has been the update to AraGen, a language model fine-tuned specifically for Arabic text generation. AraGen has been around for a while, but earlier versions struggled with fluency, coherence, and depth—issues tied to both the limited training data and Arabic's structural complexity.

The latest updates to AraGen reflect a major shift in how Arabic data is sourced and used. Instead of relying on scraped data of uneven quality, the new AraGen uses a cleaner, more diverse corpus that includes books, formal writing, articles, and filtered web content. This helps the model produce responses that are not only grammatically accurate but also contextually appropriate. The fine-tuning process also incorporates feedback loops in which human annotators, many of them native Arabic speakers, evaluate model outputs so the model can be refined iteratively.

What makes the updated AraGen more promising is how well it handles multi-turn dialogue and task-based instructions. Whether the model summarizes an article, offers a translation, or answers a technical question, it now shows more depth and flexibility. In real-world scenarios, this means more productive AI interactions in Arabic without users needing to switch to English or compromise clarity.

One of the most meaningful additions to Arabic NLP is the introduction of public leaderboards that track the performance of language models on Arabic-specific tasks. Leaderboards have played a huge role in English NLP by providing benchmarks, motivating improvements, and encouraging fair competition. Applying this approach to Arabic is long overdue.

These new leaderboards include a variety of evaluation tasks, such as reading comprehension, summarization, sentiment classification, and instruction following, all conducted in Arabic. The evaluation data is manually vetted to ensure it reflects realistic scenarios and diverse forms of the language. Importantly, the benchmarks are not limited to Modern Standard Arabic; some include regional dialects to better reflect real-world usage.

By making performance data public, the leaderboards help researchers see where current models fall short and where they excel. Public results also create space for collaboration, since researchers can submit their models and compare results using standardized metrics. Over time, this visibility should push developers to build more accurate and fair models, grounding improvements in Arabic NLP in measurable progress rather than claims.

The leaderboards also include human evaluation for certain tasks. This is particularly helpful for Arabic, where many automated metrics don't fully capture meaning or context. Human raters score outputs on fluency, relevance, and factual accuracy, adding much-needed depth to the evaluation process.
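The sketch below shows one simple way that human ratings like these could be turned into a ranking. It rests on several assumptions:

- a 1 to 5 rating scale;
- equal weighting of the three criteria named above;
- hypothetical model names and scores.

Actual Arabic leaderboards may use different scales, criteria, or aggregation rules.

```python
from statistics import mean

# Assumed criteria, matching the three named in the text.
CRITERIA = ("fluency", "relevance", "factual_accuracy")

# Hypothetical ratings on an assumed 1-5 scale: each dict is one rater's scores for one output.
ratings = {
    "model-a": [
        {"fluency": 5, "relevance": 4, "factual_accuracy": 4},
        {"fluency": 4, "relevance": 4, "factual_accuracy": 3},
    ],
    "model-b": [
        {"fluency": 3, "relevance": 5, "factual_accuracy": 5},
        {"fluency": 4, "relevance": 5, "factual_accuracy": 4},
    ],
}

def model_score(entries):
    """Average each criterion across ratings, then weight the criteria equally."""
    per_criterion = [mean(e[c] for e in entries) for c in CRITERIA]
    return mean(per_criterion)

def leaderboard(ratings):
    """Return (model, score) pairs sorted from highest to lowest score."""
    scores = {name: model_score(entries) for name, entries in ratings.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

for rank, (name, score) in enumerate(leaderboard(ratings), start=1):
    print(f"{rank}. {name}: {score:.2f}")
```

Equal weighting is the simplest choice. A leaderboard could just as reasonably weight factual accuracy more heavily, or report each criterion as its own column so readers can see where a model is strong or weak.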

While these updates are promising, some challenges remain. One is dialect diversity. Although recent efforts include dialectal examples, most work still leans toward MSA. For Arabic NLP to serve all speakers, models must better understand and generate various dialects, from Egyptian to Levantine to Gulf Arabic.

Another challenge is the limited availability of high-quality open-source data. Many datasets remain proprietary or behind paywalls, restricting who can contribute. More open datasets will help broaden participation, especially from underrepresented researchers and communities.

There's also the issue of tool integration. A model may perform well on a leaderboard but still be hard to use day to day. Better developer tools, plug-ins, and APIs are needed to bring these models into real-world platforms such as mobile apps and educational software.

Despite these hurdles, this wave of progress feels different. There is momentum, community effort, and a clearer understanding of what quality means in Arabic NLP. Whether it's a student in Morocco using a chatbot or a researcher in Jordan building an app with AraGen, these developments are starting to make a real difference.

Arabic NLP is advancing with better instruction-following models, an upgraded AraGen, and transparent leaderboards. These efforts are shaping more accurate, accessible tools for native speakers. As research continues and data improves, users can expect AI that better reflects the language’s depth and diversity. This progress marks a meaningful step toward more inclusive and reliable Arabic-language technology.

Advertisement

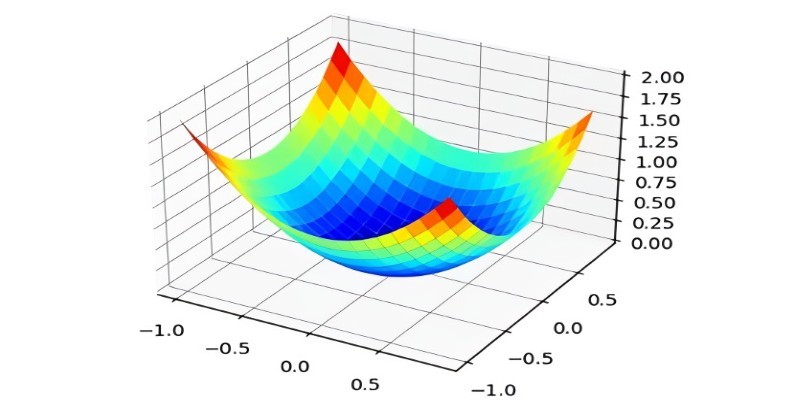

What the Adam optimizer is, how it works, and why it’s the preferred adaptive learning rate optimizer in deep learning. Get a clear breakdown of its mechanics and use cases

AGI is a hypothetical AI system that can understand complex problems and aims to achieve cognitive abilities like humans

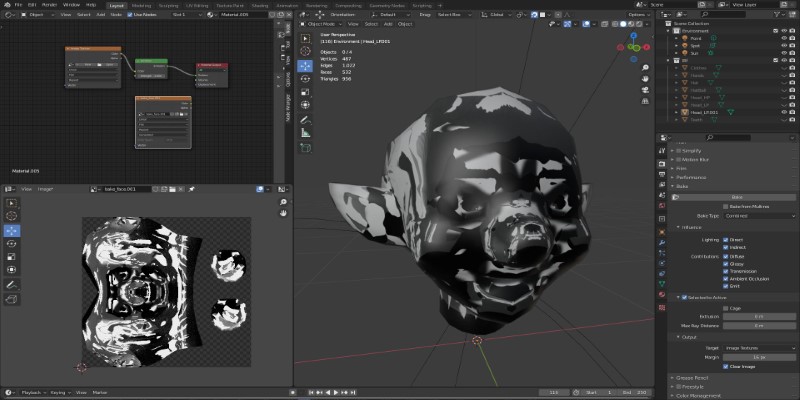

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

Hugging Face and JFrog tackle AI security by integrating tools that scan, verify, and document models. Their partnership brings more visibility and safety to open-source AI development

Language modeling helps computers understand and learn human language. It is used in text generation and machine translation

How the LiveCodeBench leaderboard offers a transparent, contamination-free way to evaluate code LLMs through real-world tasks and reasoning-focused benchmarks

Understand how deconvolutional neural networks work, their roles in AI image processing, and why they matter in deep learning

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion

AI interference lets the machine learning models make conclusions efficiently from the new data they have never seen before

How AI-powered earthquake forecasting is improving response times and enhancing seismic preparedness. Learn how machine learning is transforming earthquake prediction technology across the globe

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers