
Evaluating large language models built for coding has never been straightforward. Most benchmarks either focus too narrowly on specific tasks or rely on datasets that these models have already seen, creating inflated results that don’t reflect real-world capability. This is where LiveCodeBench steps in.

Unlike previous evaluation methods, LiveCodeBench brings a fresh approach by emphasizing contamination-free data and realistic testing scenarios. It’s built to assess models in a way that more closely mirrors how developers actually use them. It doesn’t just rank; it reveals what these models can really do, cleanly and honestly.

LiveCodeBench was created to address two key issues in the evaluation of code-generating models: task diversity and dataset contamination. Traditional benchmarks tend to rely on small, static sets of problems, many of which are either too simple or were scraped from popular coding platforms long ago. Language models trained on those same sources often post artificially high scores as a result. LiveCodeBench instead evaluates each model only on problems published after its training cutoff, which reduces leakage and gives a clearer view of how well a model generalizes.

Another distinction lies in where its problems come from. LiveCodeBench continuously collects problems from contests on LeetCode, AtCoder, and Codeforces and labels them by difficulty (easy, medium, and hard). Contest problems demand algorithmic problem-solving, careful handling of edge cases, and managing complexity, and because new contests run every week, the pool of fresh problems keeps growing. Every problem comes with test cases, so a model's score reflects whether its code actually works rather than whether it merely looks right.

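Additionally, the benchmark evaluates more than final code. Many large language models can produce working code by chance or through pattern repetition. LiveCodeBench therefore adds scenarios that probe understanding: self-repair, where a model must fix its own failing solution using error feedback; code execution, where it must predict what a given program outputs; and test output prediction, where it must work out the expected output for a test input from the problem statement alone. These tasks show whether a model can trace the logic of a program or is just reproducing memorized templates, a level of granularity rarely seen in earlier benchmarks.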
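One way to check whether a model understands code rather than reproducing templates is to ask it to predict what a program prints and compare that prediction with the real output. LiveCodeBench's code execution scenario follows this idea; the sketch below is a simplified illustration, not its actual harness. It assumes the model's answer arrives as a plain string and runs the program in-process with Python's `exec`. The helper names `run_and_capture` and `grade_execution_prediction` are hypothetical.

```python
import contextlib
import io


def run_and_capture(program: str) -> str:
    """Run a small Python program and return everything it prints, trimmed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        # Fresh globals dict; real harnesses sandbox untrusted code and add timeouts.
        exec(program, {})
    return buffer.getvalue().strip()


def grade_execution_prediction(program: str, predicted_output: str) -> bool:
    """Give credit only if the model's predicted output matches the actual output."""
    return run_and_capture(program) == predicted_output.strip()


# Usage: the model sees this program and must say what it prints.
program = """
def sum_of_evens(xs):
    total = 0
    for x in xs:
        if x % 2 == 0:
            total += x
    return total

print(sum_of_evens([1, 2, 3, 4]))
"""

print(grade_execution_prediction(program, "6"))   # True
print(grade_execution_prediction(program, "10"))  # False
```

A production harness would execute untrusted code in an isolated process with a time limit; `exec` is used here only to keep the sketch self-contained.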

One of the biggest silent problems in model evaluation is contamination. When a model is trained on data that later appears in its test set, the evaluation becomes meaningless: the model is demonstrating recall, not intelligence. LiveCodeBench tackles this with time. Every problem is tagged with the date it was published, and a model is scored only on problems released after its training cutoff. Those problems did not exist when the training data was collected, so they cannot have leaked into it, a guarantee that static benchmarks such as HumanEval, MBPP, or CodeContests cannot offer once they have circulated online. As a result, performance reflects a model's reasoning and not just its memory.

The team behind LiveCodeBench publishes the benchmark in versioned releases, each adding problems from recent contests, so the set of unseen problems keeps growing as new models appear. The leaderboard lets users choose the window of problem release dates to evaluate on, which makes it easy to see whether a model's score holds up on problems published after its training cutoff.
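LiveCodeBench tags each problem with its publication date so that a model can be scored only on problems released after its training cutoff. The sketch below shows that filtering step under stated assumptions: each problem record carries a release date and the model's cutoff is known. The `Problem` class, its fields, the `uncontaminated` helper, and the problem IDs are all hypothetical and do not reflect LiveCodeBench's actual data format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Problem:
    # Hypothetical record; field names are illustrative, not LiveCodeBench's schema.
    problem_id: str
    platform: str
    release_date: date


def uncontaminated(problems: list[Problem], training_cutoff: date) -> list[Problem]:
    """Keep only problems published strictly after the model's training cutoff."""
    return [p for p in problems if p.release_date > training_cutoff]


problems = [
    Problem("lc-001", "LeetCode", date(2023, 3, 12)),
    Problem("ac-002", "AtCoder", date(2023, 11, 4)),
    Problem("cf-003", "Codeforces", date(2024, 2, 20)),
]

cutoff = date(2023, 9, 1)  # assumed cutoff for an example model
fresh = uncontaminated(problems, cutoff)
print([p.problem_id for p in fresh])  # ['ac-002', 'cf-003']
```

Moving `cutoff` later shrinks the evaluation set, which is why newer models are best compared on the most recent problem window.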

The commitment to avoiding leakage makes LiveCodeBench more trustworthy than older standards, especially when comparing newer models that may have been trained on internet-scale datasets. For developers, researchers, and industry users, this matters. It's the difference between a model that solves a problem because it understands the logic and one that has simply seen the answer during training.

LiveCodeBench evaluates models automatically by executing what they produce. The core metric is pass@1: the share of problems for which a model's generated solution passes all of the problem's test cases. The same execution-based checking extends to the other scenarios, where a repaired program must pass the tests and a predicted output must match the real one.
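Functional correctness is typically scored by running each sampled solution against the test cases and then estimating pass@k, with pass@1 as the headline number. The sketch below uses the widely used unbiased estimator 1 - C(n-c, k) / C(n, k) for n samples of which c are correct. The test format (argument tuples paired with expected return values) and the helper names are assumptions for illustration, not LiveCodeBench's evaluation code.

```python
from math import comb
from typing import Callable

# Hypothetical test format: (positional arguments, expected return value).
Case = tuple[tuple, object]


def passes_all_tests(solution: Callable[..., object], tests: list[Case]) -> bool:
    """Return True only if the candidate matches the expected result on every test."""
    for args, expected in tests:
        try:
            if solution(*args) != expected:
                return False
        except Exception:
            return False  # a crash counts as a failed test
    return True


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Usage: four sampled solutions to "add two integers".
tests: list[Case] = [((1, 2), 3), ((-4, 4), 0), ((10, 5), 15)]
samples = [
    lambda a, b: a + b,
    lambda a, b: a - b,            # wrong operator
    lambda a, b: abs(a) + abs(b),  # fails on negative input
    lambda a, b: b + a,
]

n = len(samples)
c = sum(passes_all_tests(s, tests) for s in samples)
print(f"{c}/{n} correct, pass@1 = {pass_at_k(n, c, 1):.2f}")  # 2/4 correct, pass@1 = 0.50
```

With k = 1 the estimator reduces to c / n, the fraction of sampled solutions that pass every test.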

Results are reported per scenario and per difficulty level. Because correctness is judged by running tests rather than by comparing text, a solution that looks plausible but fails on an edge case earns no credit. Evaluating repair and execution alongside generation rewards language models that aren't just code generators but coding assistants: tools that can read, debug, and reason about code as well as write it.

Moreover, submissions to the leaderboard are publicly documented. Anyone submitting a model must provide details about its architecture, training data sources (as far as they can be disclosed), and inference setup. This makes cherry-picking or fine-tuning specifically for the benchmark, a problem that plagued older code evaluation datasets, much harder to hide.

LiveCodeBench also supports multiple programming languages. While Python remains dominant, JavaScript, Java, C++, and a few domain-specific languages are supported, allowing for a broader assessment of a model’s language understanding and syntax handling. This is especially useful for organizations looking to deploy models in diverse environments beyond the Python-centric ecosystem.

The rise of code-generating large language models has reshaped how developers write software. However, the usefulness of these tools depends heavily on how they're evaluated. A misleading benchmark can set back progress, inflate expectations, and even lead to the adoption of models that aren't truly capable.

LiveCodeBench pushes the field forward by setting a higher standard. Its focus on contamination-free testing, reasoning-aware evaluation, and multi-language support makes it one of the most reliable tools for judging a model’s real-world value. It’s not about being perfect — it’s about being honest, replicable, and useful. As models evolve, the benchmark will evolve with them, ensuring that evaluations keep pace with improvements and avoid giving models an easy pass.

LiveCodeBench is already influencing how new models are being trained and tuned. Developers now have an incentive to build models that reason more clearly, generalize better, and work beyond Python-heavy environments. Researchers, meanwhile, gain a dependable yardstick that separates genuine improvements from performance tricks.

Whether you’re developing a new code model or comparing options for integration into your IDE or platform, LiveCodeBench gives you solid ground to stand on. It doesn’t reward memorization or gimmicks. It rewards understanding.

LiveCodeBench changes the game for evaluating code LLMs. By staying contamination-free and focusing on deeper reasoning, it offers a more accurate and honest look at how these models perform in real-life coding situations. It’s not about chasing perfect scores — it’s about building models that can truly assist with real development work. As the benchmark continues to grow and evolve, it promises to keep setting the bar for what meaningful code generation should look like, helping the field stay grounded while moving forward.

Advertisement

AI interference lets the machine learning models make conclusions efficiently from the new data they have never seen before

How the LiveCodeBench leaderboard offers a transparent, contamination-free way to evaluate code LLMs through real-world tasks and reasoning-focused benchmarks

How Open-source DeepResearch is reshaping the way AI search agents are built and used, giving individuals and communities control over their digital research tools

Looking for the best podcasts about generative AI? Here are ten shows that explain the tech, explore real-world uses, and keep you informed—whether you're a beginner or deep in the field

How One Hot Encoding converts text-based categories into numerical data for machine learning. Understand its role, benefits, and how it handles categorical variables

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

How temporal graphs in data science reveal patterns across time. This guide explains how to model, store, and analyze time-based relationships using temporal graphs

What data annotation is, why it matters in machine learning, and how it works across tools, types, and formats. A clear look at real-world uses and common challenges

How stochastic in machine learning improves model performance through randomness, optimization, and generalization for real-world applications

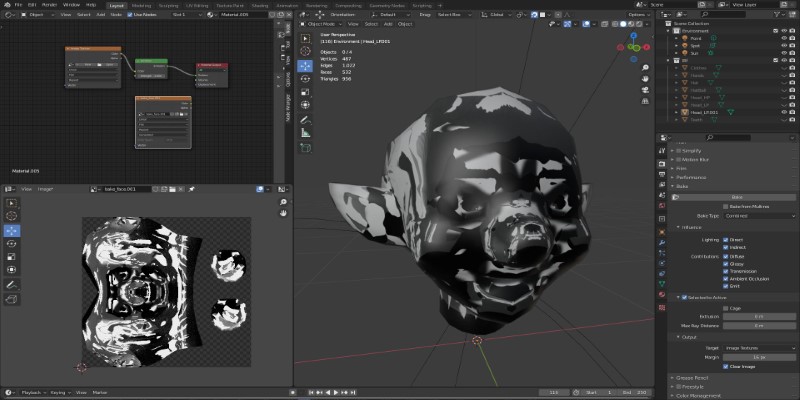

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

How Monster API simplifies open source model tuning and deployment, offering a faster, more efficient path from training to production without heavy infrastructure work

Natural language generation is a type of AI which helps the computer turn data, patterns, or facts into written or spoken words