Every smart technology you use (a voice assistant, a recommendation engine, facial recognition) relies on something most people never think about: labeled data. Machines don’t just “know” things; they must be shown what’s what. That’s the job of data annotation. Behind every accurate AI response is someone, somewhere, who tagged, marked, or categorized a piece of raw information. It’s not flashy, but it’s essential. Without it, supervised machine learning models are blind. This quiet work builds the foundation for everything from medical diagnostics to self-driving cars. If AI is the engine, data annotation is the fuel that powers it.

Data annotation means labeling raw data so machines can recognize patterns. This labeling helps supervised machine learning models learn by example. For instance, a model trained to recognize cats in images needs lots of annotated pictures where the cats are clearly identified. Without those labels, the system wouldn't know what it's looking at.

Annotation spans many data types: text, images, video, and audio. In natural language processing, you might tag a sentence with its emotion, or tag individual words with their part of speech or as named entities. In computer vision, you mark objects with boxes or detailed outlines. In audio, annotations might involve transcripts or marking background noise. It all depends on what the machine needs to learn.

The detail level of annotations varies, too. Some tasks are basic, like assigning a sentiment label. Others are complex, like marking every pixel of an image to show object boundaries. Regardless of how deep the labeling goes, the goal is always to provide clean, structured data that teaches machines how to make sense of new information.

There are a few major categories of data annotation, each linked to the input data type.

Image annotation is used in facial recognition, medical imaging, and self-driving vehicles. You might draw boxes around people, tag clothing items, or outline lane markers. The goal is to train systems to "see" like a person would.
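A drawn box is usually stored as a class name plus coordinates. The sketch below assumes a hypothetical `Box` record in pixel corner format (x_min, y_min, x_max, y_max), not any particular tool's schema, and computes intersection over union (IoU), a common way to check how closely two annotators' boxes for the same object agree.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical bounding-box label in pixel coordinates (corner format)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box (max < min) contributes no area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for no overlap."""
    # Corners of the overlapping rectangle, which may be empty.
    ix_min, iy_min = max(a.x_min, b.x_min), max(a.y_min, b.y_min)
    ix_max, iy_max = min(a.x_max, b.x_max), min(a.y_max, b.y_max)
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


# Two annotators outlining the same pedestrian.
annotator_1 = Box("person", 10, 20, 110, 220)
annotator_2 = Box("person", 20, 30, 120, 230)
print(round(iou(annotator_1, annotator_2), 3))  # 0.747
```

Teams often treat IoU above a chosen threshold (0.5 is a common starting point) as agreement, but the right cutoff depends on how precise the boxes need to be.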

Text annotation is common in chatbots, spam filters, and translation services. It involves tasks like sentiment tagging, labeling parts of speech, or identifying names and places in a sentence. In customer service systems, annotators label each message with the user’s intent so bots can learn to recognize what users want.
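Names and places are often annotated as character spans and then converted into one tag per token for training. This minimal sketch assumes simple whitespace tokenization, non-overlapping spans that line up with token boundaries, and the widely used BIO scheme (B- starts an entity, I- continues it, O is outside any entity); the `(start, end, label)` span format is a hypothetical choice for illustration.

```python
def spans_to_bio(text: str, spans: list[tuple[int, int, str]]) -> list[tuple[str, str]]:
    """Convert (start, end, label) character spans into BIO tags on whitespace tokens."""
    tagged = []
    pos = 0
    for token in text.split():
        start = text.index(token, pos)  # character offset of this token
        end = start + len(token)
        pos = end
        tag = "O"
        for s_start, s_end, label in spans:
            if start >= s_start and end <= s_end:
                # The first token inside a span gets B-, later tokens get I-.
                tag = ("B-" if start == s_start else "I-") + label
                break
        tagged.append((token, tag))
    return tagged


text = "Ada Lovelace lived in London"
spans = [(0, 12, "PER"), (22, 28, "LOC")]
for token, tag in spans_to_bio(text, spans):
    print(token, tag)
```

Real pipelines would use a proper tokenizer, since punctuation attached to words breaks the whitespace assumption.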

Audio annotation includes converting speech into text, identifying speakers, or marking emotional tone. It’s essential for applications like transcription services and voice assistants. The annotations may also include timestamps or background noise labels.
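Audio labels are typically time-stamped segments. The sketch below uses a hypothetical `Segment` record (start and end in seconds, speaker, transcript) and shows two checks a team might run: that every segment has a positive duration and that no speaker overlaps with themselves, plus a per-speaker total of annotated time.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical time-stamped audio annotation."""
    start: float  # seconds from the beginning of the recording
    end: float
    speaker: str
    text: str


def validate(segments: list[Segment]) -> list[str]:
    """List problems: non-positive durations or overlaps within one speaker."""
    problems = []
    by_speaker = defaultdict(list)
    for seg in segments:
        if seg.end <= seg.start:
            problems.append(f"{seg.speaker}: end {seg.end}s is not after start {seg.start}s")
        by_speaker[seg.speaker].append(seg)
    # Different speakers may overlap (cross-talk); one speaker should not.
    for speaker, segs in by_speaker.items():
        segs.sort(key=lambda s: s.start)
        for prev, cur in zip(segs, segs[1:]):
            if cur.start < prev.end:
                problems.append(f"{speaker}: overlap at {cur.start}s")
    return problems


def talk_time(segments: list[Segment]) -> dict[str, float]:
    """Total annotated seconds per speaker."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


segments = [
    Segment(0.0, 2.5, "agent", "Thanks for calling."),
    Segment(2.0, 5.0, "caller", "Hi, my order is late."),
    Segment(4.5, 6.0, "caller", "It was due Monday."),
]
print(validate(segments))   # ['caller: overlap at 4.5s']
print(talk_time(segments))  # {'agent': 2.5, 'caller': 4.5}
```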

Video annotation is often the most labor-intensive form. Each object in a video may need to be tracked across many frames: annotators draw shapes around it and follow how it moves through the footage. This helps systems like surveillance tools or sports analysis platforms follow motion and change over time.
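Labeling every frame by hand is slow, so many video tools let annotators draw a box on a few keyframes and fill in the frames between. A minimal sketch of that idea, assuming linear motion between keyframes and boxes stored as (x_min, y_min, x_max, y_max) tuples keyed by frame number; `interpolate_track` is a hypothetical helper, and real tools may use smarter tracking.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def interpolate_track(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Fill in a box for every frame between annotated keyframes, assuming linear motion."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    track = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0.0 at f0, approaching 1.0 at f1
            track[f] = tuple(c0 + t * (c1 - c0) for c0, c1 in zip(b0, b1))
    track[frames[-1]] = keyframes[frames[-1]]  # last keyframe kept as drawn
    return track


# A car annotated only on frames 0 and 4; frames 1 to 3 are filled in.
keyframes = {0: (0.0, 0.0, 40.0, 20.0), 4: (80.0, 0.0, 120.0, 20.0)}
for frame, box in sorted(interpolate_track(keyframes).items()):
    print(frame, box)
```

Annotators then only need to correct the in-between frames where the object speeds up, turns, or becomes occluded.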

Another specialized method is semantic segmentation, where every pixel is labeled. This method helps machines detect very specific areas within an image, and it's common in detailed image work like autonomous driving or medical scans.
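A semantic-segmentation label is usually a mask with the same height and width as the image, holding one class ID per pixel. This toy example uses a hypothetical 4x6 mask and class map (0 = background, 1 = road, 2 = car) and measures how much of the image each class covers, a quick sanity check a team might run over a labeled dataset.

```python
from collections import Counter

CLASSES = {0: "background", 1: "road", 2: "car"}  # hypothetical class map

# Each entry is the class ID of one pixel in a 4x6 image.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 2, 2, 0, 0],
    [1, 1, 2, 2, 1, 1],
    [1, 1, 1, 1, 1, 1],
]


def class_coverage(mask: list[list[int]]) -> dict[str, float]:
    """Fraction of pixels assigned to each class in the mask."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    return {CLASSES[c]: n / total for c, n in sorted(counts.items())}


print(class_coverage(mask))
```

Real masks are usually stored as image files or arrays, but the idea is the same: every pixel carries exactly one label.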

There are three basic ways to annotate data: manually, semi-automatically, or automatically. Trained humans do manual annotation, which is generally the most accurate. However, it's also the slowest and most expensive. Crowdsourcing platforms are often used for this kind of work.

Semi-automatic annotation uses software to label data, which a person corrects or confirms. This can save time while still maintaining a good level of accuracy. It's often used in large-scale machine-learning tasks.
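A semi-automatic pass can be sketched as: a model proposes a label for every item, a reviewer confirms or corrects it, and the team tracks how often suggestions were accepted. Here `suggest` is a hypothetical stand-in for a real model (a trivial keyword rule), and reviewer decisions are passed in as a dictionary rather than coming from an actual review interface.

```python
def suggest(text: str) -> str:
    """Hypothetical pre-labeler: a keyword rule standing in for a trained model."""
    negative_words = {"late", "broken", "refund"}
    words = text.lower().split()
    return "negative" if any(w in negative_words for w in words) else "positive"


def review(items: list[str], corrections: dict[int, str]) -> tuple[list[str], float]:
    """Merge model suggestions with a reviewer's decisions.

    `corrections` maps an item's index to the label the reviewer chose instead;
    any item without an entry counts as confirmed. Returns the final labels and
    the share of suggestions the reviewer accepted unchanged.
    """
    final, accepted = [], 0
    for i, text in enumerate(items):
        proposed = suggest(text)
        label = corrections.get(i, proposed)
        accepted += label == proposed
        final.append(label)
    return final, (accepted / len(items) if items else 0.0)


items = ["My order is late", "Great service, thanks", "Not happy at all"]
labels, acceptance = review(items, corrections={2: "negative"})
print(labels)                # ['negative', 'positive', 'negative']
print(round(acceptance, 2))  # 0.67
```

A falling acceptance rate is one signal that the pre-labeling model may need retraining before it saves reviewers any time.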

Fully automatic annotation is rare but growing. These systems rely on existing models to produce initial labels. The approach works best when the task is simple or when a strong baseline model is available, and the results usually still need review.

Various tools support this process. Some are open-source, like LabelImg for image work or Doccano for text. Others are commercial platforms offering project management, team collaboration, and built-in model training. Some are tailored to specific data types—for example, CVAT focuses on video and image annotations.

Today's tools often have AI-assisted features, like suggesting labels or snapping to object edges. These features reduce mistakes and speed up the process. A good annotation tool supports common data formats, lets users export results easily, and offers quality control options.
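Exporting often means converting between box conventions. For example, the COCO format stores a box as [x_min, y_min, width, height] in pixels, while YOLO-style label files store a class index followed by the box center, width, and height, each normalized by the image size. `coco_to_yolo` below is a hypothetical helper for illustration, not part of any tool's API.

```python
def coco_to_yolo(bbox: list[float], img_w: int, img_h: int, class_id: int) -> str:
    """Convert a COCO [x_min, y_min, width, height] pixel box to a YOLO label line."""
    x_min, y_min, w, h = bbox
    x_center = (x_min + w / 2) / img_w
    y_center = (y_min + h / 2) / img_h
    # YOLO line layout: class x_center y_center width height (last four in 0 to 1).
    return f"{class_id} {x_center:.6f} {y_center:.6f} {w / img_w:.6f} {h / img_h:.6f}"


# A 100x50 box with its top-left corner at (200, 100) in a 640x480 image.
print(coco_to_yolo([200, 100, 100, 50], 640, 480, 0))
# 0 0.390625 0.260417 0.156250 0.104167
```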

One of the biggest problems in data annotation is inconsistency. Two annotators might label the same text differently, especially if the task isn’t clearly defined. That’s why annotation guidelines are important—they help reduce differences in how data is labeled.

Another major challenge is scale. High-quality labeled data takes time and money to produce. Many companies outsource the task, which can cut costs but may affect the quality. Strong review systems are necessary to catch errors and ensure correct annotations.

Teams control quality using inter-annotator agreement scores, gold-standard examples (items with known correct labels mixed into the work queue), and random spot checks. Before starting, annotators may be trained on sample tasks. Good workflows include both human review and automated checks.
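For two annotators, agreement is often measured with Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement implied by each annotator's label frequencies. The sketch below computes it from scratch alongside a simple gold-standard accuracy check; all labels and item IDs are made up for illustration.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    # Observed agreement: share of items where both chose the same label.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: both pick the same label at random, using their own frequencies.
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # kappa is undefined here; this sketch treats it as perfect agreement
    return (p_o - p_e) / (1 - p_e)


def gold_accuracy(labels: dict[str, str], gold: dict[str, str]) -> float:
    """Share of gold-standard items (known answers) that an annotator got right."""
    checked = [item for item in gold if item in labels]
    if not checked:
        return 0.0
    return sum(labels[item] == gold[item] for item in checked) / len(checked)


ann_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
ann_2 = ["pos", "neg", "neg", "neg", "pos", "pos"]
print(round(cohens_kappa(ann_1, ann_2), 3))  # 0.333

annotator = {"t1": "pos", "t2": "neg", "t3": "neg"}
gold = {"t1": "pos", "t3": "pos"}
print(gold_accuracy(annotator, gold))  # 0.5
```

Here the annotators agree on 4 of 6 items (67%), but because half that agreement is expected by chance, kappa is only about 0.33, a sign the guidelines may need tightening.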

Bias is another concern. If annotations reflect personal or cultural assumptions—such as linking certain phrases to a specific emotion—they can lead to biased models. To avoid this, datasets should be diverse, and annotation teams should follow neutral, consistent guidelines.

Ethical concerns also exist. Many annotators are underpaid, especially in large, crowdsourced projects. Fair pay, clear task instructions, and realistic deadlines are all part of building a more responsible pipeline for machine learning training.

Looking forward, more annotation tools are starting to include active learning—a setup where the model flags only uncertain examples for human review. This helps reduce the total labeling workload while still improving model quality.
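One common active-learning strategy is uncertainty sampling: score each unlabeled item by how unsure the current model is and send only the top few to annotators. This sketch uses prediction entropy as the score; the probabilities are hypothetical model outputs, and real systems may use other criteria such as margin or disagreement between several models.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy in bits; higher means the model is less certain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the IDs of the `budget` items with the most uncertain predictions."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


# Hypothetical class probabilities from the current model for unlabeled items.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident: not worth a human's time
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.50, 0.50, 0.00],
}
print(select_for_review(predictions, budget=2))  # ['img_002', 'img_003']
```

The choice of score matters: `img_004`, which the model splits evenly between two classes, ranks below `img_003` under entropy, while margin-based sampling (the gap between the top two probabilities) would rank it first.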

Data annotation is the invisible work that powers much of modern AI. Whether tagging an object in an image or labeling customer intent in a support chat, annotation gives machines the information they need to learn. As machine learning grows in everyday applications, the need for well-labeled data will only increase. The process keeps evolving, from manual labeling toward automated tools and smarter workflows. But one thing remains true: no matter how smart the models become, they still need clear, human-labeled data to understand the world around them.

Advertisement

Explore how π0 and π0-FAST use vision-language-action models to simplify general robot control, making robots more responsive, adaptable, and easier to work with across various tasks and environments

Looking to master SQL concepts in 2025? Explore these 10 carefully selected books designed for all levels, with clear guidance and real-world examples to sharpen your SQL skills

Looking for the best podcasts about generative AI? Here are ten shows that explain the tech, explore real-world uses, and keep you informed—whether you're a beginner or deep in the field

Explore the Rabbit R1, a groundbreaking AI device that simplifies daily tasks by acting on your behalf. Learn how this AI assistant device changes how we interact with technology

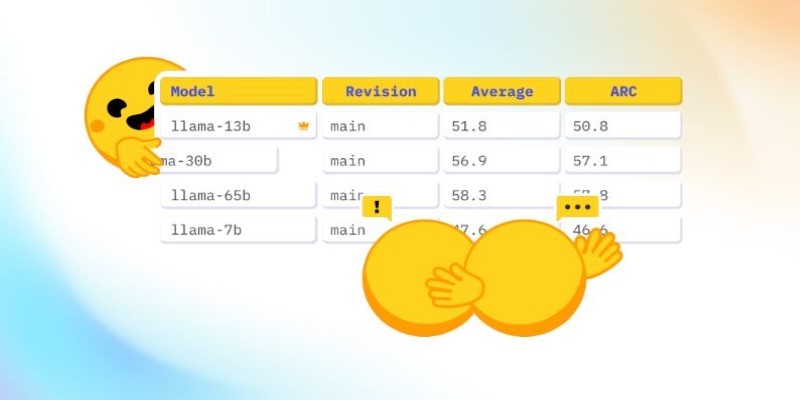

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion

Access to data doesn’t guarantee better decisions—culture does. Here’s why building a strong data culture matters and how organizations can start doing it the right way

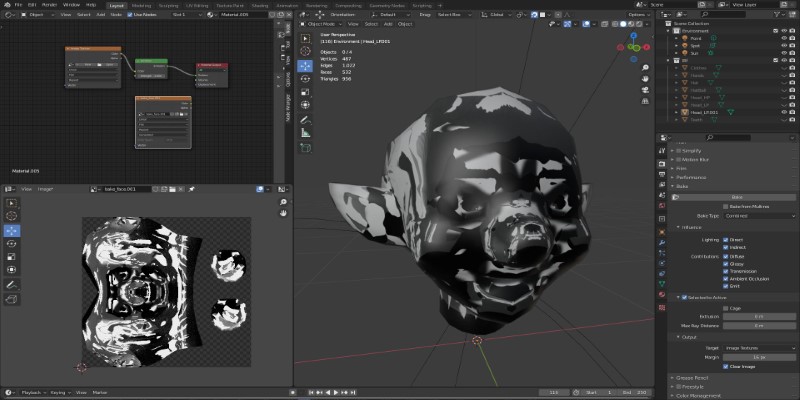

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

Understand how deconvolutional neural networks work, their roles in AI image processing, and why they matter in deep learning

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Hugging Face and JFrog tackle AI security by integrating tools that scan, verify, and document models. Their partnership brings more visibility and safety to open-source AI development