
Running a language model on your phone might sound like something only engineers in big labs do, but that’s no longer the case. With recent improvements in on-device processing, edge AI has become surprisingly accessible. If you're curious about how to run a large language model (LLM) directly on your phone using React Native, you're in the right place. This guide strips away the hype and keeps things simple—so you can understand how it works, what you need, and how to make it run. No jargon, just hands-on help.

First, a quick definition of "LLM inference on the edge." Inference is when a trained model produces answers, predictions, or completions from the input you give it. When that happens on a phone, with nothing sent to a server, it is happening on the edge. The "edge" simply means a local device, such as your smartphone, rather than a remote data center.

This matters for a few reasons. First, it improves privacy because the data doesn’t leave your phone. It also removes the network round trip, which cuts latency for short requests. And for people with limited or no internet access, edge inference lets them keep using AI apps offline. A phone doesn’t have the horsepower of a full server, but it’s enough for smaller tasks and quick interactions.

Now, combine that with React Native, a popular framework for building mobile apps using JavaScript. You write your code once, and it works on iOS and Android. Putting these pieces together means you can build cross-platform apps that include AI without needing a constant connection to the cloud.

To get started, you'll need to set up a React Native environment. If you've built apps before, the basic process is the same. What makes it different is adding a lightweight LLM that can run locally on the device. You won't be using massive models like GPT-4 here; they’re far too big for phones. Instead, you’ll use smaller, quantized models such as TinyLlama or DistilGPT-2.

Quantization compresses a model by storing its weights at lower numeric precision (for example, 4-bit integers instead of 16-bit floats), trading a small amount of accuracy for a large reduction in size. The resulting models take up less storage and use less memory. Tools like GGML (a C tensor library) and llama.cpp make it possible to run them efficiently on mobile hardware.
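To get a feel for why quantization matters on a phone, here is a rough back-of-the-envelope sketch. It assumes the weights dominate the file size and ignores the per-block scale factors and metadata that real quantized files carry, so actual files will be somewhat larger. The function name `estimateModelSizeMB` is made up for illustration.

```typescript
// Rough weight-storage estimate: parameters * bits per weight / 8 = bytes.
// Assumption: ignores quantization scales, metadata, and runtime buffers.
function estimateModelSizeMB(paramCount: number, bitsPerWeight: number): number {
  const bytes = (paramCount * bitsPerWeight) / 8;
  return bytes / 1e6; // decimal megabytes
}

// TinyLlama has roughly 1.1 billion parameters.
const TINYLLAMA_PARAMS = 1.1e9;

const fp16SizeMB = estimateModelSizeMB(TINYLLAMA_PARAMS, 16); // 2200
const q4SizeMB = estimateModelSizeMB(TINYLLAMA_PARAMS, 4); // 550

console.log(`16-bit: ~${fp16SizeMB} MB, 4-bit: ~${q4SizeMB} MB`);
```

Going from 16-bit to 4-bit weights cuts the estimate to a quarter, which is often the difference between a model that fits on a mid-range phone and one that doesn't.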

To use one of these models in React Native, the most straightforward approach is a native bridge. React Native lets you write parts of your app in native code: Java or Kotlin for Android, Swift or Objective-C for iOS. These bridges let your JavaScript code call native functions, so you can reach a model running in C/C++ through a library like llama.cpp.
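From the JavaScript side, a bridge like this usually looks like an object with a few promise-returning methods. The sketch below is one possible shape, not the API of any particular library: the `LlamaNativeModule` interface, its method names, and the model path are all hypothetical. In a real app the object would come from `NativeModules` in `react-native`; here a fake stands in so the sketch runs without a device.

```typescript
// Hypothetical shape of a native module written in Java/Kotlin or Swift
// that wraps a C/C++ inference library.
interface LlamaNativeModule {
  loadModel(modelPath: string): Promise<void>;
  complete(prompt: string, maxTokens: number): Promise<string>;
}

// Thin typed wrapper so the rest of the app never touches the bridge directly.
class LocalLlm {
  private native: LlamaNativeModule;
  private loaded = false;

  constructor(native: LlamaNativeModule) {
    this.native = native;
  }

  async load(modelPath: string): Promise<void> {
    await this.native.loadModel(modelPath);
    this.loaded = true;
  }

  async complete(prompt: string, maxTokens: number = 64): Promise<string> {
    if (!this.loaded) {
      throw new Error("Model not loaded; call load() first");
    }
    return this.native.complete(prompt, maxTokens);
  }
}

// Stand-in for the native side (assumption: a real module would run the model).
const fakeNative: LlamaNativeModule = {
  async loadModel() {},
  async complete(prompt) {
    return `echo: ${prompt}`;
  },
};
```

Usage would be along the lines of `const llm = new LocalLlm(fakeNative); await llm.load("/path/to/model.gguf"); await llm.complete("Hello")`, with the fake swapped for the real native module on a device.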

There are two ways to ship the model: bundle it with the app, or download it after installation to keep the app small. Bundling can be acceptable for smaller models under 100MB. Anything larger is better downloaded on demand and kept in local storage using a filesystem library such as react-native-fs or expo-file-system.
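The bundle-or-download decision can be captured in a small helper. This is a minimal sketch that takes the 100MB figure above as the cutoff; the names `chooseDelivery` and `BUNDLE_LIMIT_BYTES` are illustrative, and the right threshold for a given app depends on store limits and how much install size its users tolerate.

```typescript
// 100 MB (decimal), the rough cutoff mentioned above. Assumption: tune per app.
const BUNDLE_LIMIT_BYTES = 100 * 1000 * 1000;

type Delivery = "bundle" | "download";

// Models under the limit ship inside the app; larger ones are fetched after install.
function chooseDelivery(modelSizeBytes: number): Delivery {
  return modelSizeBytes < BUNDLE_LIMIT_BYTES ? "bundle" : "download";
}

console.log(chooseDelivery(40 * 1000 * 1000)); // small model
console.log(chooseDelivery(550 * 1000 * 1000)); // e.g. a 4-bit ~1B-parameter model
```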

One key detail: mobile processors are improving, but running LLMs takes time. You may need to run inference in a background thread to keep your app responsive. This is done on the native side, where the model's processing happens outside the JavaScript thread to avoid freezing your UI.
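The threading itself lives in native code, but the JavaScript side can help by never firing overlapping inference calls at a model that handles one request at a time. The sketch below is one way to do that, assuming the native call returns a promise. `SerialQueue` and `fakeInference` are illustrative names, and the fake stands in for a real bridge call.

```typescript
// Runs async tasks one at a time, in the order they were submitted.
class SerialQueue {
  private tail: Promise<void> = Promise.resolve();

  run<T>(task: () => Promise<T>): Promise<T> {
    const result = this.tail.then(() => task());
    // Swallow failures on the internal chain so one failed request
    // does not block the requests queued behind it.
    this.tail = result.then(
      () => undefined,
      () => undefined
    );
    return result;
  }
}

// Hypothetical stand-in for a native inference call; records when it runs.
const inferenceLog: string[] = [];

async function fakeInference(prompt: string): Promise<string> {
  inferenceLog.push(`start ${prompt}`);
  await Promise.resolve(); // yield, as a real native call would
  inferenceLog.push(`end ${prompt}`);
  return `done: ${prompt}`;
}

const inferenceQueue = new SerialQueue();
```

Calling `inferenceQueue.run(() => fakeInference("a"))` and then the same with `"b"` makes the second request wait for the first to finish, while the UI stays free to render because each call is awaited rather than blocked on.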

Now comes the fun part: what can you do with this setup? You won't be writing a mobile version of ChatGPT, but you can still build plenty. Think of AI features that need quick, localized interactions without a cloud dependency.

For example, you can build a personal note assistant that works offline. It could help summarize text, suggest phrasing, or correct grammar as you write. You could also create an interactive learning app that responds to student questions or offers simple explanations. Some developers have even used LLMs on phones to power chatbots for therapy or journaling that work offline—keeping conversations private.

The key is to design the use case around what these smaller models can handle. Keep prompts short and responses concise. Think of them more as smart text processors than full AI companions. You’re not training the models—just using them in their pre-trained state to do light, meaningful tasks.
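Keeping prompts short is easy to enforce in code. Here is a minimal sketch for the note-summarizing case: it caps the user's text before building the prompt. The character limit is a crude stand-in for a token budget, and the function names, prompt wording, and default of 500 characters are assumptions for illustration.

```typescript
// Cap input length before it reaches the model.
// Assumption: characters are used as a rough proxy for tokens.
function truncateInput(text: string, maxChars: number): string {
  const trimmed = text.trim();
  if (trimmed.length <= maxChars) {
    return trimmed;
  }
  return trimmed.slice(0, maxChars) + "...";
}

// Build a short, single-purpose prompt around the (possibly truncated) note.
function buildSummaryPrompt(note: string, maxNoteChars: number = 500): string {
  const body = truncateInput(note, maxNoteChars);
  return `Summarize the note below in one sentence.\n\nNote: ${body}\n\nSummary:`;
}

console.log(buildSummaryPrompt("  Buy milk, call the dentist, finish the report.  "));
```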

On the front end, React Native makes this easy. You build familiar input boxes, buttons, and display areas using components like TextInput and FlatList, then connect them to the model’s output through native bridges. The UI doesn’t need to change much; you’re just swapping a remote API call for a local function call.

Running LLMs on mobile devices brings clear benefits, but it’s important to understand the practical limits. Memory usage is a major factor. Even smaller, quantized models can take up a lot of RAM, which may lead to crashes or slow performance if not managed properly. Keeping your prompts short and unloading unused data helps prevent these issues.

Battery consumption is another concern. Since on-device inference is CPU-intensive, frequent usage can drain a phone quickly. It’s better to keep interactions brief and avoid constant background processing.

Testing across devices is also more demanding. Simulators often can’t handle the native modules required to run these models, so real device testing becomes essential. Lastly, inference speed won’t match server-side performance. Responses can take a second or two, especially on mid-range phones. That’s fine for short replies but less suitable for long-form output. These tradeoffs are worth managing when building practical, responsive mobile apps.

Using React Native to run LLMs directly on the phone is a smart way to bring AI features closer to users. It works well for lightweight tasks, keeps data on the device, and avoids the need for constant internet access. With careful planning around model size, memory use, and energy impact, useful, responsive tools can be built. This approach opens up new possibilities for mobile development, especially where privacy and offline access matter more than raw speed or scale.

Advertisement

Access to data doesn’t guarantee better decisions—culture does. Here’s why building a strong data culture matters and how organizations can start doing it the right way

Looking to master SQL concepts in 2025? Explore these 10 carefully selected books designed for all levels, with clear guidance and real-world examples to sharpen your SQL skills

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

Gradio's new data frame brings real-time editing, better data type support, and smoother performance to interactive AI demos. See how this structured data component improves user experience and speeds up prototyping

Discover the Playoff Method Prompt Technique and five powerful ChatGPT prompts to boost productivity and creativity

AGI is a hypothetical AI system that can understand complex problems and aims to achieve cognitive abilities like humans

How AI-powered earthquake forecasting is improving response times and enhancing seismic preparedness. Learn how machine learning is transforming earthquake prediction technology across the globe

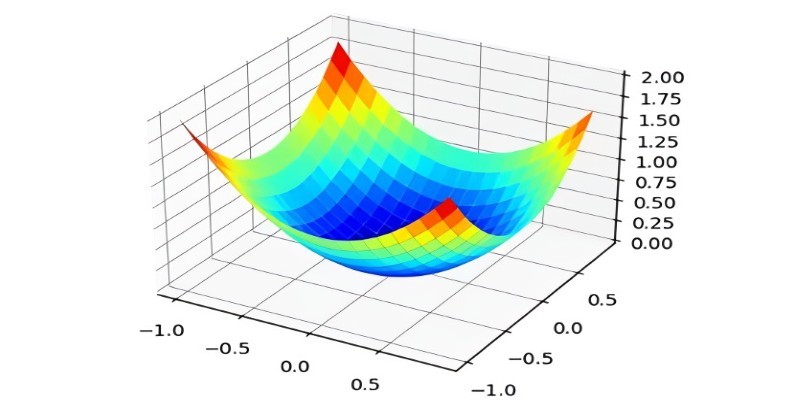

What the Adam optimizer is, how it works, and why it’s the preferred adaptive learning rate optimizer in deep learning. Get a clear breakdown of its mechanics and use cases

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion

Explore how π0 and π0-FAST use vision-language-action models to simplify general robot control, making robots more responsive, adaptable, and easier to work with across various tasks and environments

A simple and clear explanation of what cognitive computing is, how it mimics human thought processes, and where it’s being used today — from healthcare to finance and customer service