We’re interacting with systems that appear to think, understand, and even learn. But they’re not human. They’re machines built to process information more like we do — only faster and with far more data. This is where cognitive computing steps in. The term sounds technical, but the idea behind it is simple. It's about machines that mimic human thought processes to assist with decision-making.

You might’ve come across it in voice assistants, medical diagnosis tools, or even customer service chatbots that don't feel completely robotic. So, what exactly is going on behind the scenes?

Cognitive computing is all about creating systems that perform more like the human mind — not merely storing or recalling information but comprehending it, reasoning on it, and learning from it. These systems integrate technologies such as artificial intelligence, machine learning, natural language processing, and data mining to interpret information in a manner that is more intuitive and less robotic.

In contrast to traditional computing, which follows fixed instructions and strict rules, cognitive systems adapt. They weigh new information against previous experience, discover patterns, and modify their responses. That enables them to make suggestions or take actions that seem thoughtful, even though everything is being processed within a machine. Cognitive computing doesn't replace human thought. It aids it by performing complicated analysis at a scale and speed we can't match.

There are a few core traits that make cognitive systems stand out:

These systems process data with a sense of surrounding circumstances — time, location, emotional tone, prior conversations, and more. That’s why some digital assistants can follow a conversation naturally or respond differently based on the situation.

They never stop updating themselves. As new data flows in, the system refines its output. This ability to learn on the fly helps in environments where conditions shift constantly, like cybersecurity or financial forecasting.
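As a minimal sketch of what "refining output as new data flows in" can look like, the snippet below keeps a running average that is updated one observation at a time rather than recomputed from scratch. The `RunningEstimate` class and the sample readings are illustrative assumptions, not part of any particular cognitive computing product, and real systems update far richer models than a single mean.

```python
class RunningEstimate:
    """Keeps a mean that is refined one observation at a time (hypothetical helper)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        # Incremental mean: move the estimate toward the new value
        # by a step that shrinks as more data has been seen.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


estimate = RunningEstimate()
for reading in [10.0, 12.0, 14.0]:  # made-up sample data
    estimate.update(reading)
```

The point of the design is that the estimate never needs the full history: each new value adjusts what the system already knows.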

Cognitive systems can understand and respond to human language, whether written or spoken. They break down phrasing, tone, and intent to respond in ways that feel more human than mechanical.

Speed matters. A cognitive tool needs to process information and return results right away, whether it’s flagging a security threat or assisting in a clinical diagnosis. The quicker it thinks, the more useful it becomes.

It’s not a futuristic concept anymore. Cognitive computing is already part of many industries — some visible, some quietly working in the background.

Doctors are now using systems that suggest diagnoses based on thousands of medical journals, clinical trials, and patient histories. These tools help identify patterns a human might miss and surface treatment options that are backed by data, not just gut instinct.

In finance, cognitive systems evaluate market trends, customer behaviors, and transaction histories to guide investment decisions or detect anomalies. They help reduce risk by scanning far more variables than a human advisor ever could.
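One very simple way to picture anomaly detection on transaction histories is a z-score check: flag any amount that sits unusually far from the average. This is only a sketch under stated assumptions. The `flag_anomalies` function, the threshold of 2.0, and the sample amounts are hypothetical, and production systems consider many more variables than a single amount.

```python
from statistics import mean, stdev


def flag_anomalies(amounts, threshold=2.0):
    """Return amounts more than `threshold` sample standard deviations from the mean."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        # All amounts are identical, so nothing stands out.
        return []
    return [a for a in amounts if abs(a - mu) / sigma > threshold]


history = [20, 22, 19, 21, 23, 20, 22, 500]  # made-up transaction amounts
suspicious = flag_anomalies(history)
```

With this sample data, only the 500 transaction is flagged.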

You’ve likely interacted with a support chatbot that didn’t feel scripted, probably because it used cognitive computing. These systems analyze tone, a customer's previous issues, and language cues to resolve new problems more naturally.

Retailers utilize cognitive tools to predict what customers might want next based on their browsing habits, purchase history, and current trends. It's the tech behind personalized product suggestions that actually make sense.
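To make the idea of suggestions based on purchase history concrete, here is a rough sketch that recommends items frequently bought together with what is already in a customer's basket. The `recommend` function and the sample histories are assumptions made for illustration. Real retail systems also blend in browsing behavior and current trends, which this sketch ignores.

```python
from collections import Counter


def recommend(purchase_histories, basket, top_n=2):
    """Suggest items that most often appear alongside the items in `basket`."""
    basket_items = set(basket)
    scores = Counter()
    for history in purchase_histories:
        items = set(history)
        overlap = items & basket_items
        if not overlap:
            continue  # this customer shares nothing with the basket
        for item in items - basket_items:
            scores[item] += len(overlap)
    # Sort by score (highest first), breaking ties alphabetically.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]


histories = [  # made-up purchase histories
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "stove"],
    ["novel", "bookmark"],
]
suggestions = recommend(histories, ["tent"])
```

Here a customer buying a tent is shown a sleeping bag first, because that pairing appears most often in the sample histories.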

Cognitive computing sounds impressive, but it’s far from perfect. There are still some very real limitations and concerns.

These systems are only as good as the data they get. Poor quality data leads to poor results. If the information fed into the system is outdated, biased, or incomplete, the output can be misleading. That’s a risk when dealing with things like medical recommendations or legal advice.

It's not always clear how a cognitive system reaches its conclusion. This lack of transparency can be a problem in sectors like healthcare or law, where understanding the "why" behind a decision matters just as much as the decision itself.

Since these systems rely heavily on vast datasets — many of which include sensitive information — they become attractive targets for cyberattacks. Protecting them requires a level of cybersecurity that matches their complexity.

Implementing a cognitive computing system isn’t cheap. It involves high-end hardware, complex software, and ongoing maintenance. This puts it out of reach for many small businesses, at least for now.

Even with the hurdles, cognitive computing is not slowing down. As hardware gets faster and cheaper, and as algorithms become better at learning and adapting, more industries are starting to explore what’s possible.

In classrooms, cognitive tools can offer personalized learning plans. They can analyze where a student is struggling and adjust teaching methods in real time. That means a more tailored approach to learning — one that adjusts to each student's pace and style.

Law firms are beginning to use systems that comb through thousands of legal documents to find relevant cases and rulings. What used to take hours or even days can now be done in minutes, allowing lawyers to focus more on analysis than on digging through files.

Factories are applying cognitive systems to monitor equipment and predict failures before they happen. This saves time and money and can even prevent dangerous breakdowns.
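A toy version of this kind of monitoring is a rolling average over sensor readings that raises a warning when the trend crosses a limit. The `first_warning` function, the window size, the limit of 75.0, and the temperature values are all hypothetical. An actual predictive maintenance system would learn its thresholds from historical failure data instead of hard-coding them.

```python
from collections import deque


def first_warning(readings, window=3, limit=75.0):
    """Return the index where the rolling average first exceeds `limit`, or None."""
    recent = deque(maxlen=window)  # holds only the latest `window` readings
    for index, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            return index
    return None


temperatures = [70, 71, 72, 75, 79, 84, 90]  # made-up sensor data
warning_at = first_warning(temperatures)
```

Averaging over a window means a single noisy reading does not trigger a warning, while a sustained upward drift does.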

Some developers are working on systems that can detect changes in language patterns or voice tones that suggest anxiety, depression, or other concerns. These tools aren't replacements for professionals, but they can act as early-warning systems that flag when someone might need help.

Cognitive computing isn’t about machines thinking like humans — it’s about machines assisting humans in thinking better. The ability to process massive amounts of information, understand context, and offer intelligent suggestions makes it one of the most practical developments in technology today. As long as we remain careful about data quality, privacy, and transparency, cognitive computing will likely continue to become a trusted part of everyday decisions — not because it replaces thinking, but because it helps make sense of too much information, too fast, for any person to manage alone.

Advertisement

Looking to master SQL concepts in 2025? Explore these 10 carefully selected books designed for all levels, with clear guidance and real-world examples to sharpen your SQL skills

How AI-powered earthquake forecasting is improving response times and enhancing seismic preparedness. Learn how machine learning is transforming earthquake prediction technology across the globe

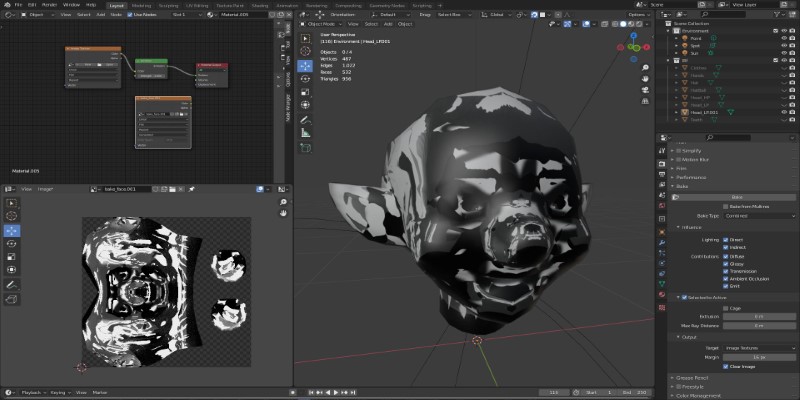

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

Hugging Face and JFrog tackle AI security by integrating tools that scan, verify, and document models. Their partnership brings more visibility and safety to open-source AI development

Explore how π0 and π0-FAST use vision-language-action models to simplify general robot control, making robots more responsive, adaptable, and easier to work with across various tasks and environments

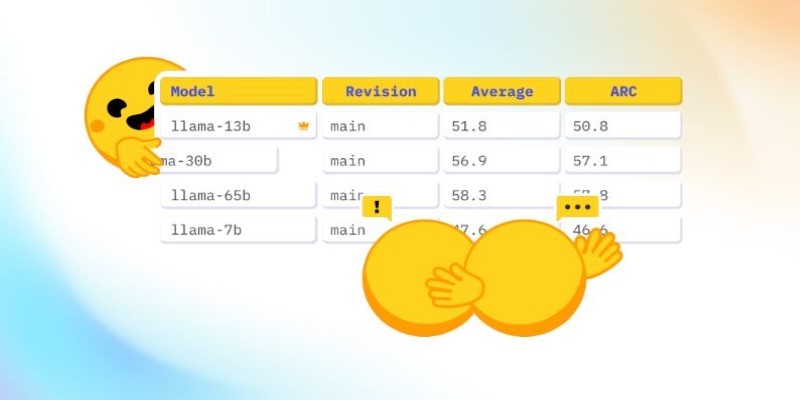

How Math-Verify is reshaping the evaluation of open LLM leaderboards by focusing on step-by-step reasoning rather than surface-level answers, improving model transparency and accuracy

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Explore the Rabbit R1, a groundbreaking AI device that simplifies daily tasks by acting on your behalf. Learn how this AI assistant device changes how we interact with technology

How One Hot Encoding converts text-based categories into numerical data for machine learning. Understand its role, benefits, and how it handles categorical variables

How temporal graphs in data science reveal patterns across time. This guide explains how to model, store, and analyze time-based relationships using temporal graphs

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion

What data annotation is, why it matters in machine learning, and how it works across tools, types, and formats. A clear look at real-world uses and common challenges