
Robots have come a long way from being stiff machines that only followed pre-written instructions. Researchers are pushing for systems that can understand natural language, process what they see, and act accordingly in real-world environments. π0 and its faster variant, π0-FAST, are at the forefront of this shift.

These models are designed to handle general robot control by connecting vision, language, and action in a more intuitive and adaptable way. They're part of a new generation of AI that treats robot learning less like programming and more like teaching.

At their core, π0 and π0-FAST are large vision-language-action (VLA) models. Rather than being built for one specific task, they aim to act as general-purpose interfaces: a user gives the robot an instruction in natural language, and the model translates it into actions, taking into account what is in front of the robot and what needs to be done.

The primary model, π0, is trained on a wide variety of tasks, environments, and commands. It takes camera images, the robot's current joint state, and a text instruction as input, linking what it sees with what the user intends. For instance, if a user says, "Grasp the red apple on the left side," the model reads the camera input, locates the apple, and outputs the motor commands needed to grasp it. The same model can be used on different platforms and applications, from household robots to factory machines, without a separate training pipeline for each.

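π0-FAST is an efficiency-focused variant of π0. It keeps the general instruction-following ability of its sibling but changes how actions are represented. Instead of generating continuous actions with a separate "action expert" network, as π0 does, π0-FAST predicts actions as discrete tokens produced by FAST (Frequency-space Action Sequence Tokenization), a tokenizer that compresses each short chunk of actions with a discrete cosine transform so it can be encoded in far fewer tokens than naive per-timestep discretization would need. Efficiency matters because milliseconds can make a difference in robotics, especially when a robot must react to a changing environment.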
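The "FAST" in π0-FAST stands for Frequency-space Action Sequence Tokenization. Its core idea can be sketched in a few lines: apply a discrete cosine transform (DCT) to each action dimension over a chunk of timesteps, then scale and round the coefficients to integers. Smooth motions concentrate their energy in a few low-frequency coefficients, so most of the remaining integers round to zero, which a later compression step can exploit. This is a simplified illustration under stated assumptions, not the released tokenizer: the function names, the `scale` value, and the toy trajectory are hypothetical, and the byte-pair encoding (BPE) step that FAST applies to the integer sequence is omitted.

```python
import math
from typing import List


def dct(x: List[float]) -> List[float]:
    """Orthonormal DCT-II of a 1-D sequence."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[t] * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n))
        out.append(s * (math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)))
    return out


def idct(c: List[float]) -> List[float]:
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(c)
    return [
        sum(
            c[k]
            * (math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n))
            * math.cos(math.pi * (t + 0.5) * k / n)
            for k in range(n)
        )
        for t in range(n)
    ]


def encode_chunk(chunk: List[List[float]], scale: float = 10.0) -> List[int]:
    """chunk[t][d] is action dimension d at timestep t (assumed already normalized)."""
    horizon, dims = len(chunk), len(chunk[0])
    # DCT along time, separately for each action dimension.
    coeffs = [dct([chunk[t][d] for t in range(horizon)]) for d in range(dims)]
    # Quantize: scale, then round to the nearest integer (the lossy step).
    quantized = [[round(c * scale) for c in coeffs[d]] for d in range(dims)]
    # Flatten with low frequencies first, interleaving the action dimensions.
    return [quantized[d][k] for k in range(horizon) for d in range(dims)]


def decode_chunk(tokens: List[int], horizon: int, dims: int, scale: float = 10.0) -> List[List[float]]:
    """Undo the flattening and quantization, then invert the DCT."""
    per_dim = [
        idct([tokens[k * dims + d] / scale for k in range(horizon)]) for d in range(dims)
    ]
    return [[per_dim[d][t] for d in range(dims)] for t in range(horizon)]


# Toy 10-step chunk for a hypothetical 2-D action: a linear ramp and a smooth arc.
horizon = 10
chunk = [[t / 9, math.sin(math.pi * t / 9)] for t in range(horizon)]
tokens = encode_chunk(chunk)
restored = decode_chunk(tokens, horizon, dims=2)
zeros = sum(1 for tok in tokens if tok == 0)
max_err = max(abs(a - b) for row, rec in zip(chunk, restored) for a, b in zip(row, rec))
print(len(tokens), zeros, round(max_err, 3))
```

Because the DCT used here is orthonormal, rounding each coefficient to within 0.5 / `scale` bounds the reconstruction error, so `scale` trades token precision against how many coefficients survive as nonzero.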

One of the most challenging aspects of developing general-purpose robot control models is the need for vast, varied data. π0 was trained on a large mixture of data collected from many different robots performing a wide range of tasks, from simple object manipulation to dexterous, multi-stage behaviors such as folding laundry, bussing tables, and assembling boxes.

To help the model generalize, the training data included not only successful executions but also failures and edge cases. This helps π0 handle uncertainty and recover from mistakes. The instructions also varied in phrasing and complexity, which helped the model cope with synonyms, paraphrases, and ambiguous requests.

Rather than training a separate model for each robot or task, π0 was designed as a single model that can plug into different hardware setups. Robots with different arms, grippers, or mobile bases expose different numbers of joints and controls, so π0 maps every robot's state and action vectors into one shared format, zero-padding those of smaller robots to match the largest one.
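One simple way to let a single model accept robots with different numbers of joints, and the approach the π0 paper describes, is to zero-pad every robot's state and action vectors to the size of the largest robot in the training data. The sketch below shows only that padding step. It is an illustration under stated assumptions, not π0's actual preprocessing code: `MAX_ACTION_DIM`, the function names, and the boolean mask (useful for ignoring padded entries, for example in a training loss) are hypothetical.

```python
from typing import List, Tuple

# Assumption: the largest robot in the training mix has 14 action dimensions
# (for example, two 6-joint arms plus two grippers). The real value depends on the data.
MAX_ACTION_DIM = 14


def pad_action(action: List[float], max_dim: int = MAX_ACTION_DIM) -> Tuple[List[float], List[bool]]:
    """Zero-pad a robot-specific action vector to the shared size.

    Returns the padded vector and a mask marking which entries are real.
    """
    if len(action) > max_dim:
        raise ValueError(f"action has {len(action)} dims, more than max_dim={max_dim}")
    n_pad = max_dim - len(action)
    return action + [0.0] * n_pad, [True] * len(action) + [False] * n_pad


def unpad_action(padded: List[float], robot_dim: int) -> List[float]:
    """Keep only the entries the target robot actually uses."""
    return padded[:robot_dim]


# A hypothetical single-arm robot: 6 joint targets plus 1 gripper command.
single_arm = [0.1, -0.2, 0.3, 0.0, 0.5, -0.1, 1.0]
padded, mask = pad_action(single_arm)
print(len(padded), sum(mask))                               # 14 7
print(unpad_action(padded, len(single_arm)) == single_arm)  # True
```

Padding keeps the model's input and output shapes fixed, so the same network can be trained on mixed batches from different robots; each robot then simply reads back the slice of the output it needs.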

π0-FAST builds on the same principles but uses a distilled version of π0's training pipeline. It focuses on the most frequently encountered tasks and robot types, trimming the data while preserving diversity. This streamlined approach allows it to respond much more quickly while sacrificing very little in generality.

In tests, π0 and π0-FAST handled many real-world scenarios. Robots controlled by π0 reliably followed instructions like "Put the banana in the bowl next to the blue cup." These aren't hard-coded commands; they are flexible and contextual. The same sentence can call for different motions depending on the layout of the scene and the objects present, and the model has to work under varying lighting.

What stands out about π0 is its ability to adapt mid-task. Because it keeps generating new actions from the latest camera images, if a robot is asked to hand over an object and the person moves, π0 adjusts the motion without requiring a full reset. This behavior comes from its integrated view of language, perception, and motor control: rather than replaying a memorized sequence of steps, it keeps working toward the goal.
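Part of what makes this kind of mid-task adjustment possible is closed-loop control with action chunks: the π0 paper describes predicting a chunk of 50 future actions at a time, and the robot keeps querying the model with fresh observations as it moves. The toy loop below sketches that idea with heavy simplifications. `toy_policy` is a hypothetical stand-in that steers a 1-D gripper toward whatever target it currently observes, where a real VLA would read camera images and the instruction; the chunk length of 8 and the choice to execute 4 actions before re-planning are assumptions.

```python
from typing import Callable, List


def toy_policy(position: float, target: float, horizon: int = 8, gain: float = 0.5) -> List[float]:
    """Hypothetical stand-in for a VLA policy: returns a chunk of future positions
    that move a 1-D gripper toward the target observed right now."""
    chunk, p = [], position
    for _ in range(horizon):
        p += gain * (target - p)  # with gain=0.5, close half of the remaining gap each step
        chunk.append(p)
    return chunk


def run_closed_loop(target_at: Callable[[int], float], steps: int, execute_per_chunk: int = 4) -> List[float]:
    """Execute the first few actions of each chunk, then re-plan from the new state."""
    position, trajectory, t = 0.0, [], 0
    while t < steps:
        chunk = toy_policy(position, target_at(t))  # fresh observation of the target
        for p in chunk[:execute_per_chunk]:
            if t >= steps:
                break
            position = p
            trajectory.append(position)
            t += 1
    return trajectory


# The person's hand sits at +1.0, then moves to -1.0 at step 10.
traj = run_closed_loop(lambda t: 1.0 if t < 10 else -1.0, steps=30)
print(round(traj[11], 3), round(traj[-1], 3))  # 1.0 -1.0
```

Re-planning every few steps is what lets the gripper follow the moved target without a reset. Executing more of each chunk means the model runs less often, at the cost of reacting more slowly to changes.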

π0-FAST proved its worth in time-sensitive environments, such as interactive demonstrations or mobile robotics, where every delay matters. It provides nearly the same instruction-following accuracy as π0 but responds in a fraction of the time. This makes it ideal for robots that need to work around humans, where speed and safety are tightly linked.

Another important feature is zero-shot generalization. π0 and π0-FAST can often complete tasks they've never seen before simply because they understand the language and visual patterns well enough to make educated decisions. This makes them far more flexible than traditional robots that depend on scripted behaviors.

The appeal of models like π0 isn’t just about making robots smarter—it’s about making them more usable. Most people don’t want to learn code or robot-specific instructions for basic tasks. Talking to a robot and having it understand is a major step toward practical use.

π0 and π0-FAST enable a single model to support many robots in homes, warehouses, labs, or hospitals. This reduces the need for costly retraining: rather than building a new model for every use case, developers can use the existing models directly or fine-tune them.

Combining vision, language, and action allows for more natural learning. Future versions might learn from observing people, reading manuals, or understanding diagrams. They could explain their actions, ask questions, or adjust based on feedback. This isn't just a concept—it’s starting to work in real settings.

π0-FAST shows that fast response and high performance can go hand in hand, letting developers build robots that respond smoothly in homes and workplaces. Robots that can listen, see, and act with intent can take on a much wider range of jobs.

π0 and π0-FAST shift how robots are trained and controlled. By merging language, vision, and motor control, they make robots more capable, flexible, and easier to use. Users give natural instructions; the model handles the rest. Their ability to generalize across tasks, adapt to different hardware, and respond quickly marks a major step toward general-purpose robots. As this approach improves, robots will feel less like machines to manage and more like helpers that understand what they are asked to do.

Advertisement

AGI is a hypothetical AI system that can understand complex problems and aims to achieve cognitive abilities like humans

How AI-powered earthquake forecasting is improving response times and enhancing seismic preparedness. Learn how machine learning is transforming earthquake prediction technology across the globe

How Arabic leaderboards are reshaping AI development through updated Arabic instruction following models and improvements to AraGen, making AI more accessible for Arabic speakers

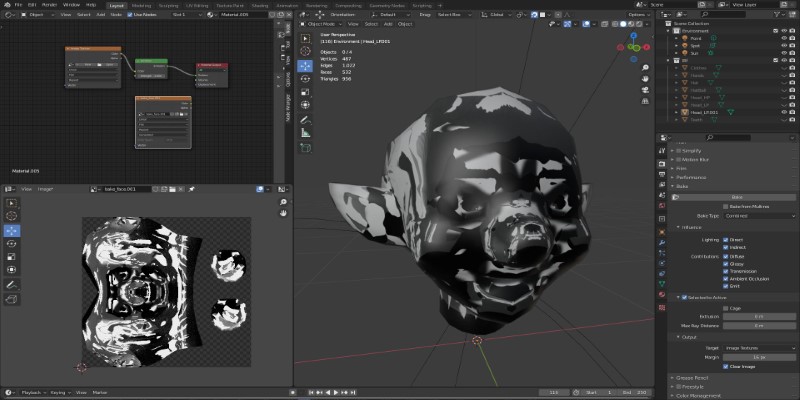

Learn how to bake vertex colors into textures, set up UVs, and export clean 3D models for rendering or game development pipelines

How One Hot Encoding converts text-based categories into numerical data for machine learning. Understand its role, benefits, and how it handles categorical variables

What data annotation is, why it matters in machine learning, and how it works across tools, types, and formats. A clear look at real-world uses and common challenges

How Krutrim became India’s first billion dollar AI startup by building AI tools that speak Indian languages. Learn how its large language model is reshaping access and inclusion

Access to data doesn’t guarantee better decisions—culture does. Here’s why building a strong data culture matters and how organizations can start doing it the right way

Understand how deconvolutional neural networks work, their roles in AI image processing, and why they matter in deep learning

How to run LLM inference on edge using React Native. This hands-on guide explains how to build mobile apps with on-device language models, all without needing cloud access

Natural language generation is a type of AI which helps the computer turn data, patterns, or facts into written or spoken words

Hugging Face and JFrog tackle AI security by integrating tools that scan, verify, and document models. Their partnership brings more visibility and safety to open-source AI development